Researchers at the Fermi National Accelerator Laboratory are using Amazon Web Services' cloud to better understand neutrinos and to get a glimpse of what these elusive particles look like.

Neutrinos are everywhere and pass through our bodies continuously, but these subatomic particles are notoriously difficult to detect. To do so, the Fermi National Accelerator Laboratory's (Fermilab) facility in Ash River, Minn., enlisted Amazon Web Services' cloud to help capture and analyze neutrino data.

Ash River is the home of the Fermilab's Far Detector, which was created to help capture a trace of passing neutrinos and show their state. The task is more complicated than it sounds, because neutrinos can "oscillate" or change their identities as they travel through time and space, according to Sanjay Padhi, an adjunct professor of physics at Brown University and a researcher in the Scientific Computing unit of AWS.

In the experiments, a beam of neutrinos is directed at the Far Detector from a site 350 feet underground at Fermilab's main facility in Batavia, Ill., outside of Chicago. (Fermilab is run by the US Department of Energy.)

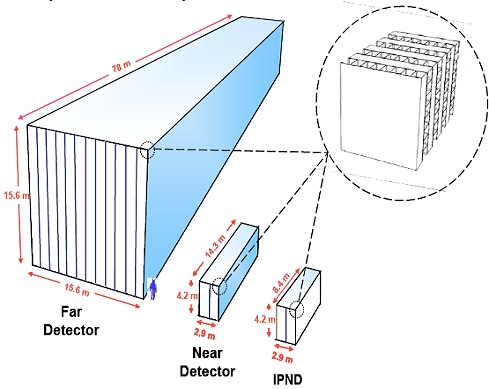

The Far Detector in Ash River is the first scientific instrument built to capture the oscillations of neutrinos after they have traveled 500 miles underground. It weighs 14,000 tons, and it's located deep underground in Northern Minnesota, in line with the beam sent from Fermilab's Batavia base.

Padhi wrote about Amazon's role in the Ash River experiment in a July 5 guest post on the blog of Jeff Barr, AWS's chief evangelist. The experiment so far involves 200 scientists from 41 universities and research institutions.

Neutrinos are known to come in three varieties -- muon, tau, and electron neutrinos -- and these particles are known to form different combinations. But scientists are still trying to determine the mass of each combination and understand how they oscillate as they travel.

The NOvA experiment, or NuMI Off-Axis νe Appearance effort, is designed to collect data that may shed more light on those transitions.

"As the neutrinos travel the distance between the laboratories, they undergo a fundamental change in their identities. These changes are carefully measured by the massive NOvA detector," Padhi wrote in the blog.

The Ash River detector is 53 feet high and 180 feet long. "It acts as a gigantic digital camera to observe and capture the faint traces of light and energy that are left by particle interactions within the detector," Padhi wrote.

The experiment would put a strain on many computer clusters because it is capturing "two million 'pictures' per second of these interactions." The data is analyzed by sophisticated software, allowing individual neutrino interactions to be identified and measured, Padhi wrote.

Without the detector and software analysis, the neutrinos remain ghostlike particles.

Results from the NOvA experiment are currently being presented at the 27th International Conference on Neutrino Physics and Astrophysics in London, which is taking place between July 4 and July 9.

The more scientists can learn about neutrinos, the better they figure they can understand the physics of the universe, with its many unanswered questions.

Neutrinos are associated with the early formation of the universe, and understanding how they change mass as they combine could provide a key to how the universe has evolved, according to a fact sheet on the experiment.

Three major physics analysis programs ran on AWS to achieve the results that are being presented at the conference. The analysis applications consumed a gigabyte of data per core hour of analysis and yielded a gigabyte of physics output. They ran on 7,500 cores obtained through the EC2 Spot Market, which lets a low bidder use a cluster as it becomes free of other, higher-paying uses.

The analysis programs consumed a total of 400,000 core hours and were able to double their processing capacity when needed to meet critical periods of the experiment, Padhi wrote. The analysis applications ran for a span of several months.
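To put those figures in perspective, here is a rough back-of-envelope sketch in Python. It uses only the numbers quoted above (7,500 cores, 400,000 core hours, a gigabyte of input per core hour). The function names are illustrative, and the assumptions that 1 TB equals 1,000 GB and that 7,500 cores was the full fleet size are ours, not Padhi's.

```python
# Figures quoted in the article; everything derived from them is an estimate.
CORES = 7_500                 # cores obtained through the EC2 Spot Market
TOTAL_CORE_HOURS = 400_000    # total consumed by the analysis programs
GB_IN_PER_CORE_HOUR = 1       # "a gigabyte of data per core hour"


def total_input_tb(core_hours, gb_per_core_hour):
    """Total input data in terabytes, assuming 1 TB = 1,000 GB."""
    return core_hours * gb_per_core_hour / 1_000


def full_fleet_hours(core_hours, cores):
    """Elapsed hours if every core were busy the whole time (a lower bound)."""
    return core_hours / cores


input_tb = total_input_tb(TOTAL_CORE_HOURS, GB_IN_PER_CORE_HOUR)
hours = full_fleet_hours(TOTAL_CORE_HOURS, CORES)

print(f"Input data read: about {input_tb:.0f} TB")
print(f"Full-fleet equivalent: about {hours:.1f} hours")
```

By this estimate the jobs read roughly 400 terabytes of input, and the total work equals a little over two days of all 7,500 cores running flat out. Because the applications actually ran over several months, the fleet was evidently used in bursts rather than continuously, which fits the pay-as-available nature of spot capacity.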
