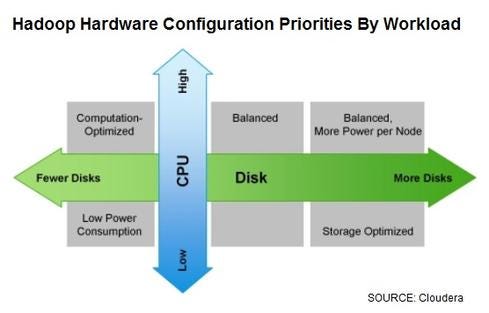

Hadoop is known for running on "industry standard hardware," but just what does that mean? We break down popular options and a few interesting niche choices.

