Faceoff: Auditable AI Versus the AI Blackbox Problem

For some, auditable AI is the same as explainable AI. For others, it means something entirely different. Meanwhile, FICO is using it to determine your credit scores. Here’s what all that means.

Fears surrounding artificial intelligence are all based on one of two types of ignorance. The first is born of imagination and sci-fi lore in the heads of those who do not understand what AI is and isn’t. The second is born of a true lack of information, specifically the inability of even those skilled in AI to see what models are doing to arrive at individual decisions -- the infamous and impenetrable AI black box. Companies that can’t see how AI is making each decision are at risk financially, reputationally, and legally. The prevailing atmosphere of ignorance -- one kind formed from illogical fear and the other from a lack of information -- is untenable. But breaking the AI black box seems undoable. And yet remarkable progress has been made in making AI auditable -- an important step in coaxing AI to reveal its secrets.

“The notion of auditable AI extends beyond the principles of responsible AI, which focuses on making AI systems robust, explainable, ethical, and efficient. While these principles are essential, auditable AI goes a step further by providing the necessary documentation and records to facilitate regulatory reviews and build confidence among stakeholders, including customers, partners, and the general public,” says Adnan Masood, PhD, chief AI architect at UST, a global provider of digital solutions, platforms, product engineering, and innovation services. Masood is also a visiting scholar at the Stanford AI Lab where he works closely with academia and industry.

But how can auditable AI exist in the absence of explainable AI? How can you audit something you can’t track?

“The answer to these questions depends on whether you’re talking simply about auditing AI inputs and outputs, because that is easy. Or are we talking about auditing for transparency and explainability of the AI itself, which is very difficult?” says Kristof Horompoly, VP, AI Risk Management at ValidMind, an AI model risk management company.

It’s time to take a hard look at AI auditing options, and to consider FICO as a case study: the company has created its own path to auditing AI in order to safeguard everyone’s credit scores.

AI Auditing Options

First, consider that not all AI systems are created equal, and neither are their builders or users. This means that audit types, methods, and levels of difficulty can and do vary.

“There are a few different types of AI audits. One type is a fairly standard organizational governance audit where auditors will be looking for adherence to an organization's own AI policies,” explains Andrew Gamino-Cheong, CTO & co-founder at Trustible, a provider of responsible AI governance software.

As an example, Gamino-Cheong points to the recently published ISO 42001 standard, which he says is “heavily focused on this kind of organizational level audit.” This type of AI audit, he explains, consists of showing paper trails of approvals, good quality documentation, and evidence of appropriate controls.

“In contrast, there is also a highly technical, model-level audit. For example, the NYC Local Law 144 for AI bias in hiring system requires a model-level audit where the developer must prove that their model passes certain statistical tests,” Gamino-Cheong adds.
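As a rough illustration of what a model-level statistical test can look like, the sketch below computes per-group selection rates and impact ratios, the kind of metric a bias audit of a hiring tool typically reports. The data is made up, and the 0.8 cutoff is an illustrative assumption (the common "four-fifths" rule of thumb), not a threshold taken from the law or from anyone quoted here.

```python
from collections import Counter

def selection_rates(records):
    """records: iterable of (group, selected) pairs. Returns selection rate per group."""
    totals, hits = Counter(), Counter()
    for group, selected in records:
        totals[group] += 1
        if selected:
            hits[group] += 1
    return {g: hits[g] / totals[g] for g in totals}

def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's selection rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections; impact ratio is undefined")
    return {g: r / best for g, r in rates.items()}

# Hypothetical screening outcomes: 100 candidates in each of two groups.
records = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 24 + [("B", False)] * 76
)

rates = selection_rates(records)
ratios = impact_ratios(rates)

THRESHOLD = 0.8  # assumption: illustrative cutoff only
flagged = [g for g, r in ratios.items() if r < THRESHOLD]
print(rates, ratios, flagged)
```

In this toy data, group B is selected at a lower rate than group A, so it is the group an auditor would look at more closely.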

Methods vary as well, but generally come from two main approaches: input and output logs, or training data governance.

“There are two sides of auditing: the training data side, and the output side. The training data side includes where the data came from, the rights to use it, the outcomes, and whether the results can be traced back to show reasoning and correctness,” says Kevin Marcus, CTO at Versium, a data tools and marketing platform provider.

“The output side is trickier. Some algorithms, such as neural networks, are not explainable, and it is difficult to determine why a result is being produced. Other algorithms such as tree structures enable very clear traceability to show how a result is being produced,” Marcus adds.
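To make the traceability point concrete, here is a minimal sketch of a hand-written decision tree that returns not only a result but also the sequence of comparisons that led to it. The features, thresholds, and outcomes are hypothetical and exist only for illustration.

```python
# A tiny hand-built tree; every name and number here is made up.
TREE = {
    "feature": "income", "threshold": 50000,
    "left": {"leaf": "decline"},
    "right": {
        "feature": "late_payments", "threshold": 2,
        "left": {"leaf": "approve"},
        "right": {"leaf": "review"},
    },
}

def predict_with_trace(node, row):
    """Walk the tree and record each comparison made along the way."""
    trace = []
    while "leaf" not in node:
        feature, threshold = node["feature"], node["threshold"]
        go_left = row[feature] < threshold
        op = "<" if go_left else ">="
        trace.append(f"{feature}={row[feature]} {op} {threshold}")
        node = node["left"] if go_left else node["right"]
    return node["leaf"], trace

decision, trace = predict_with_trace(TREE, {"income": 60000, "late_payments": 1})
print(decision)
for step in trace:
    print("  because", step)
```

Every output comes with the exact path that produced it, which is the property a neural network does not offer out of the box.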

Today, many companies -- specifically those in less regulated industries -- rely on I/O logs as their audit trail.

“To me, an audit trail just means there’s a log of what happened when, but it [the log] won't go into what happened between the input and the output,” says ValidMind’s Horompoly.

In other words, the black box, which contains everything that happened between the input and the output, remains intact. In this method, it is the results in relation to the query that are audited, not the calculations that rendered the output. Auditing what happens there remains the supreme challenge. It’s even more of a challenge in generative AI models.
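A minimal sketch of this kind of input/output audit trail might look like the following. The `AuditedModel` wrapper and its field names are hypothetical, and the wrapped model is a stand-in function. Note what the log captures (what went in, what came out, when, and from which model version) and what it does not: anything about how the output was computed.

```python
import json
import time

class AuditedModel:
    """Wraps any predict function and records each call as a JSON line."""

    def __init__(self, predict_fn, model_version):
        self._predict = predict_fn
        self.model_version = model_version
        self.log = []  # in practice this would go to durable, append-only storage

    def predict(self, features):
        output = self._predict(features)
        entry = {
            "ts": time.time(),
            "model_version": self.model_version,
            "input": features,
            "output": output,
        }
        self.log.append(json.dumps(entry, sort_keys=True))
        return output

# Stand-in "model": the audit log treats it as a black box either way.
model = AuditedModel(lambda f: f["score"] > 0.5, model_version="v1")
result = model.predict({"score": 0.7})
print(model.log[0])
```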

“Traditional machine learning models, like linear regression and decision trees, are easier to audit and explain due to their transparency. In contrast, large language models (LLMs) using neural networks are more opaque, making it challenging to trace and understand their decision-making processes,” says Stephen Drew, chief AI officer at RNL, a provider of higher education enrollment management, student success, and fundraising products and services.

“For this reason, we try to avoid cracking walnuts with sledgehammers, meaning that we use LLMs when needed but always strive to solve problems with AI models using the simplest and most explainable architecture for the task,” Drew adds.

FICO Scores a Win

Despite the challenges, some companies are making serious headway in a number of novel approaches.

Take, for example, FICO, the company the world relies on for credit risk scoring services. The company started working with analytics and massive amounts of data as far back as the 1950s. The now-famous FICO scores hit the scene around 1989, some seven years after the company started releasing neural network technologies to detect financial fraud. To say that FICO has a long history in operationalizing AI, machine learning, and analytics is an understatement.

Today, Scott Zoldi is the chief analytics officer at FICO, having worked his way up the ranks over the past 25 years and counting. By all accounts, he’s an extremely active AI researcher. During his quarter century at FICO, he has focused on developing unique algorithms to meet the specific AI and machine learning requirements of its customers, which are largely financial institutions. He has also authored about 138 patents for FICO in that time.

“Back when I was a student, it was all about trying to find order in chaos. And that kind of describes my daily life here at FICO sometimes with data. I have this fundamental belief that behind all data is structure. And it's up to us to find that structure that explains behavior. And if we can do that, then you have models that are predictive, and you can use these tools to make decisions,” Zoldi says.

But that, of course, is easier said than done. After years of working with various types of AI, FICO has honed a unique method of developing AI models that meet strict criteria in accountability and fairness before they are deployed.

“We have a very narrow path of how we develop models, because I can’t have 400 data scientists building models in 400 different ways, right? They have to be built to a standard. And that standard then is codified on the blockchain,” he explains.

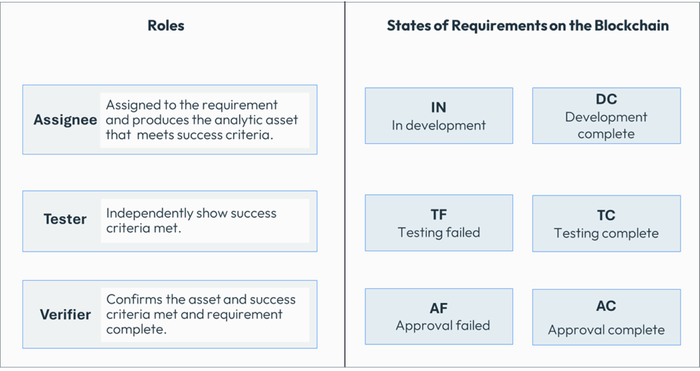

The process FICO uses goes like this:

A project begins that requires an AI model to be built.

Each new model must meet a set of 28 requirements to comply with FICO’s own model development standard before it is released.

The requirements and the subsequent verifications of compliance are entered on the blockchain.

Once all requirements are met, tested, and validated, the AI model is deployed.

“I assign who’s going to do the work, who’s going to test that each task was done properly, and who’s going to verify that both the AI scientist and the tester did their work properly? All of that information is on the blockchain,” Zoldi says.

“Now, if it’s done incorrectly, it’s on the blockchain. If it’s done correctly, it’s on the blockchain. But it is an immutable record of exactly how the model development standard was followed and where the success criteria is met or not. And we can turn that over to a governance team, to a regulator, or to a legal team to inspect so that there’s no debate about what’s in the model,” Zoldi adds.

If a model fails at any step, it is returned for further evaluation or revisions, or it’s discarded. An AI model is not deployed unless it passes every step.
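The general idea of a tamper-evident record that gates deployment can be sketched as follows. This is not FICO’s system: it is a single-process hash chain rather than a distributed blockchain, and the requirement names, roles, and status values are hypothetical. Each entry’s hash covers the previous entry’s hash, so altering any earlier record breaks verification.

```python
import hashlib
import json

GENESIS = "0" * 64

class Ledger:
    """Append-only list of records, each chained to the previous one by hash."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev_hash, record):
        payload = json.dumps(record, sort_keys=True)
        return hashlib.sha256((prev_hash + payload).encode()).hexdigest()

    def append(self, record):
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append(
            {"record": record, "prev": prev, "hash": self._digest(prev, record)}
        )

    def verify(self):
        prev = GENESIS
        for e in self.entries:
            if e["prev"] != prev or self._digest(prev, e["record"]) != e["hash"]:
                return False
            prev = e["hash"]
        return True

def ready_to_deploy(ledger, requirements):
    """Deploy only if the chain is intact and every requirement's latest status is 'verified'."""
    latest = {e["record"]["requirement"]: e["record"]["status"] for e in ledger.entries}
    return ledger.verify() and all(latest.get(r) == "verified" for r in requirements)

REQUIREMENTS = ["bias_test", "stability_test"]  # hypothetical; the article cites 28

ledger = Ledger()
ledger.append({"requirement": "bias_test", "status": "verified", "by": "tester_1"})
ledger.append({"requirement": "stability_test", "status": "failed", "by": "tester_2"})
blocked = ready_to_deploy(ledger, REQUIREMENTS)   # a failure is on record

ledger.append({"requirement": "stability_test", "status": "verified", "by": "tester_2"})
approved = ready_to_deploy(ledger, REQUIREMENTS)  # failure stays in history, latest passes
print(blocked, approved)
```

Both the failed attempt and the later pass remain in the record, which mirrors Zoldi’s point that incorrect work is logged just as permanently as correct work.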

As the modeling project progresses, each requirement goes through various stages, culminating in an approved deliverable or a rejected model. Graphic provided by FICO.

Where Auditable AI Stands Now

“The benefits of auditable AI are significant. We’re looking at increased transparency and trust, better risk management, improved regulatory compliance, enhanced ability to identify and correct biases, and greater accountability in AI decision-making,” says Vall Herard, CEO at Saifr, an AI fintech company.

“However, we must also acknowledge the challenges. The complexity of AI systems, especially LLMs, the resource-intensive nature of audit tools, the rapidly evolving field requiring constant updates, lack of standardized procedures and the need for specialized expertise are all hurdles we face,” Herard adds.

Developing explainable AI remains the holy grail, and many an AI team is on a quest to find it. Until then, several efforts are underway to develop ways of auditing AI in order to get a firmer grip on its behavior and performance. Unfortunately, there aren’t enough people working on this issue.

“Auditable AI is the starting point of all forms of existing and future AI regulation. Unfortunately, its existence is also far too rare outside of advanced data science teams in heavily regulated industries like financial services, insurance, and biopharma,” says Kjell Carlsson, PhD, head of AI strategy at Domino Data Lab.

A lot of lip service has been paid to responsible AI, which far too often turns out to be little more than marketing hot air. Keep an eye out for proof that the AI being used is performing as billed.
