Risks and Strategies to Use Generative AI in Software Development

What steps should IT leaders take to ensure their developers use generative AI in ways that maintain security and data control?

As some developers discovered earlier this year, drafting generative AI to handle a bit of coding is not always simple or secure -- but there are ways for dev teams to put it to work with greater confidence.

Back in April, Samsung staffers put some of the company’s proprietary code into ChatGPT, later realizing that the generative AI retains what it is fed in order to train itself. The incident raised concerns about exposure, and it made other companies aware of the risks that can arise when using generative AI in development and coding.

The idea is simple enough -- let AI create some code while developers focus on other tasks that require their attention. Astrophysicist Neil deGrasse Tyson spoke in June about some of the benefits of using AI to crunch data on the cosmos and free him up for his other duties. It seems a well-thought-out strategy can see generative AI play a productive role in software development, as long as there are guardrails in place.

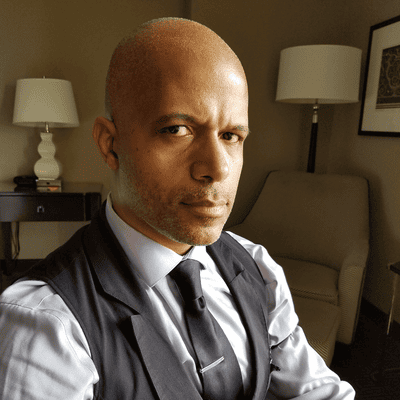

Josh Miramant, CEO of data and analytics firm Blue Orange Digital, sees increasing inevitability in AI usage among developers. “We’re sitting at kind of an interesting crossroads right here of what the future of how LLMs (large language models) and generative AI are going to be applied in mass adoption.”

The rise of ChatGPT set off a race to develop more generative AI rivals, but the rather “open” nature of some generative AI is not well-suited for sensitive, proprietary corporate use. Miramant says companies can put AI to work in software development with certain guidelines in place. “One kind of transition that will happen is these companies will start using open-sourced models that they’ll train and use internally with considerably more restrictive access,” he says. “The walls have to be pretty steep for a corporation to go all-in on training on very proprietary corporate data.”

Among the risks of using AI in software development is the potential that it regurgitates bad code that has been making the rounds in the open-source world. “There’s bad code is being copied and used everywhere,” says Muddu Sudhakar, CEO and co-founder of Aisera, developer of a generative AI platform for enterprise. “That’s a big risk.” The risk is not simply poorly written code being repeated -- the bad code might be put into play by bad actors looking to introduce vulnerabilities they may exploit at a later date.

Sudhakar says organizations that draw upon generative AI, and other open-source resources, should put controls in place to spot such risks if they intend to make AI part of the development equation. “It’s in their interest because all it takes is one bad code,” he says, pointing to the long-running hacking campaign behind the SolarWinds data breach.

The skyrocketing appeal of AI for development seems to outweigh concerns about the potential for data to leak or for other issues to occur. “It’s so useful that it’s worth actually being aware of the risks and doing it anyway,” says Babak Hodjat, CTO of AI and head of Cognizant AI Labs.

One thing to consider, he says, is that publicly available code does not mean the code is not copyrighted, which could be problematic if a company puts that code into a line of its own software products. “We need to really, really be mindful and be careful not to fall into that pitfall,” Hodjat says.

Along with monitoring for bad code, he says organizations should double-check to make sure that code from generative AI is not copyrighted or proprietary, a process that might actually be assisted by other AI. “These elements are actually much better at criticizing than they are in producing, and they’re already really good at producing text or code,” Hodjat says.

Sudhakar concurs that an open-source inspection tool should be put in place to inspect code from AI for potential copyright or intellectual property issues, “whether you have software people to inspect that and depending on your level of investment.”
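As a rough illustration of what a first-pass inspection step might look like, the Python sketch below flags lines in AI-generated code that carry common copyright or license markers. The function name `find_license_markers` and the pattern list are hypothetical and far from exhaustive; neither Sudhakar nor Hodjat describes a specific tool, and a real review would likely lean on a dedicated license scanner plus human judgment.

```python
import re

# Hypothetical, non-exhaustive markers that often accompany licensed code.
LICENSE_PATTERNS = {
    "copyright notice": re.compile(r"copyright\s+(\(c\)|©|\d{4})", re.IGNORECASE),
    "SPDX identifier": re.compile(r"SPDX-License-Identifier:\s*\S+", re.IGNORECASE),
    "GPL reference": re.compile(
        r"GNU (Lesser |Affero )?General Public License", re.IGNORECASE
    ),
    "all rights reserved": re.compile(r"all rights reserved", re.IGNORECASE),
}


def find_license_markers(code: str) -> list[tuple[int, str]]:
    """Return (line_number, marker_label) pairs for lines that look licensed."""
    findings = []
    for line_number, line in enumerate(code.splitlines(), start=1):
        for label, pattern in LICENSE_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, label))
    return findings


if __name__ == "__main__":
    generated = (
        "# Copyright (c) 2019 Example Corp. All rights reserved.\n"
        "def add(a, b):\n"
        "    return a + b\n"
    )
    for line_number, label in find_license_markers(generated):
        print(f"line {line_number}: {label}")
```

A clean result from a check like this does not prove the code is free of copyright concerns, since copied code rarely arrives with its original header attached. It only catches the obvious cases before a person or a more capable tool takes a closer look.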

There are also ways to leverage publicly available generative AI, Hodjat says, while reducing certain risks. This can include downloading an open-source large language model and running it locally. That way sensitive data and code do not leave the organization. “Being able to actually run these models on-prem is going to allow us to make use of these systems to augment coding much more,” he says. For certain proprietary code, however, AI might see complete bans.

As the allure of AI continues to grow, IT leaders will need to be vigilant about how their teams use it. “Because it’s so useful, it’s very tempting to copy paste code over an API and it’s a big no-no,” Hodjat says. “We don’t do that just like you wouldn’t be putting code in e-mail and sending it out to someone outside the organization. It’s the same thing.”
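One way a team might back up that policy is with a simple guard that checks text before it is sent to any external AI service. The Python sketch below is only an assumption about how such a check could work: the function name `is_safe_to_send` and the patterns it looks for (private keys, hard-coded credentials, confidentiality labels) are hypothetical examples, not a method described by Hodjat.

```python
import re

# Hypothetical examples of content that should not leave the organization.
SENSITIVE_PATTERNS = [
    # Private key blocks pasted along with code.
    re.compile(r"-----BEGIN (RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    # Hard-coded credentials such as API_KEY = "..." or password: '...'.
    re.compile(
        r"(?i)\b(api[_-]?key|secret|password|token)\b\s*[:=]\s*['\"][^'\"]+['\"]"
    ),
    # Confidentiality labels commonly found in file headers.
    re.compile(r"(?i)\b(confidential|proprietary|internal use only)\b"),
]


def is_safe_to_send(text: str) -> bool:
    """Return False if the text matches any known sensitive pattern."""
    return not any(pattern.search(text) for pattern in SENSITIVE_PATTERNS)


if __name__ == "__main__":
    snippet = 'API_KEY = "abc123"\nprint("hello")\n'
    if is_safe_to_send(snippet):
        print("No sensitive markers found.")
    else:
        print("Blocked: snippet appears to contain sensitive content.")
```

A pattern check of this kind cannot recognize proprietary logic that carries no label, so it works as a backstop for the policy rather than a substitute for it.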

Looking long term, he says software development through generative AI has the potential to become even easier. The programming language of choice for generative AI may move away from the likes of Python to natural languages such as English, Hodjat says, potentially making development as simple as giving a description of the desired software. “I think it’ll be very interesting for us as we move towards not having to actually write code and being just simply able to describe the functionality and have the system,” he says.

