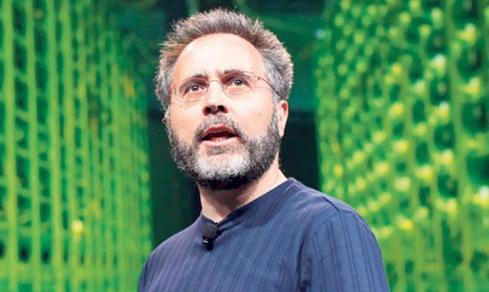

Google's Urs Hoelzle: Cloud Will Soon Be More Secure

Google's chief data center architect, Urs Hoelzle, says cloud security will improve faster than enterprise security in the next few years.

Google has pioneered key features of cloud computing, including chiller-less data centers, broader use of Linux containers, and the big data system that was the forerunner of NoSQL systems. Far from resting on the company's laurels, Urs Hoelzle, Google's senior vice president of technical infrastructure, said, "All the innovations that have happened so far [are] just a start."

Hoelzle made that pronouncement during the morning keynote address to Interop attendees at Mandalay Bay in Las Vegas on Wednesday, April 29.

The two areas that will show the greatest innovation over the next five years, he said, are cloud security and container use.

Cloud security will soon be recognized as better than enterprise data security because the cloud, by design, "is a more homogeneous environment," he said. That means IT security experts are trying to protect one type of system, replicated hundreds or thousands of times, as opposed to a variety of systems in a variety of states of update and configuration.

Figure 1: Google's Urs Hoelzle

In contrast, where one complex system has many different types of interactions with another complex system, "little holes appear" that are hard for security experts to anticipate in every case.

Hoelzle said that on-the-fly encryption and scanning systems trained to look for threats and intruders are already in place, and will be extended over the next few years in Google's cloud operations.

In an interview afterward, he said the mapping of systems -- so that a cloud data center security system knows which application talks to which application, what policies are governing, who can access what data, etc. -- will give security experts an auditable tool with which to maintain security in depth. "You only have to get it right once and it's right every time," Hoelzle observed.
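The idea of a single declared map that security staff can audit can be illustrated with a short sketch. This is not Google's system: the `Flow` type, the application names and the `audit` function are hypothetical, chosen only to show how one declared map of allowed application-to-application traffic can be checked against the traffic actually observed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One observed or permitted connection from one application to another (hypothetical model)."""
    source: str
    destination: str


# Hypothetical declarative map: which application may talk to which.
ALLOWED_FLOWS = frozenset({
    Flow("web-frontend", "orders-api"),
    Flow("orders-api", "orders-db"),
})


def audit(observed_flows):
    """Return observed flows that the declared map does not allow, sorted for stable output."""
    violations = {f for f in observed_flows if f not in ALLOWED_FLOWS}
    return sorted(violations, key=lambda f: (f.source, f.destination))


observed = [
    Flow("web-frontend", "orders-api"),
    Flow("web-frontend", "orders-db"),  # bypasses the API layer, so it should be flagged
]

for v in audit(observed):
    print(f"unexpected flow: {v.source} -> {v.destination}")
```

Because every observed flow is checked against the same declared map, a map that is correct once is applied identically to every replicated system, which is the property behind Hoelzle's "right once" remark.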

In addition, for cloud users, software changes in cloud systems occur behind APIs, so no freshly installed software is exposed at the surface where an attacker might detect a vulnerability and exploit it. When the software sits behind an API, "there's no mistake on installation" that a hacker can see, Hoelzle said.

"We run a large cloud that gets attacked every day," he said. After 15 years in which the company has learned from its mistakes, "I'd say our track record has been very good."

Over the next few years, sensitive enterprise systems may more easily prove to be in compliance if they're running in the cloud, as opposed to on-premises, he asserted in his keynote. He cited a mock auditor's report that said a given enterprise system would have been clearly in compliance on the Google Platform, but "since you're running it yourself, I will have to flag it as a system at risk."

Google remains one of the world's largest users of Linux containers. Google software engineers were instrumental in producing Linux control groups -- some of the building blocks of containers that can keep one application from stepping on the toes of another on the same server.

Google has made its container-scheduling and deployment system, Kubernetes, into an open-source code project that will be able to manage Docker containers.

Hoelzle said an enterprise that adopts Kubernetes for container management on-premises will have no problem moving the workloads running under it to the Google Cloud Platform, where containers are managed by Google Container Engine, Google's own more advanced version of the open source code.

"The configuration files, the packaging files -- the bits are exactly the same," whether a workload is running in a container under Kubernetes on-premises or on the Google App Engine/Compute Engine platform, Hoelzle said in an interview.

Furthermore, enterprises don't need to wait for the container picture to sort itself out further, in Hoelzle's view. "Kubernetes today is pretty functional. It doesn't have all the features we have, such as how to pack the best concentration of containers on a server, but it doesn't need it."

He doesn't view containers and virtual machines as being in an inevitable competition for the same workloads. Each will play a role where appropriate, but he also thinks containers are likely to run a larger share of cloud workloads in the future than they do today.

But clouds that make deft use of containers will have advantages over those that don't, he predicted. For example, a workload at rest, with no requests coming in to it at night, can be stored in a container and incur no charges in the Google platform infrastructure. If requests do show up, the workload is quick to instantiate because no operating system needs to be launched to get it running; it uses the host's.

Such deployment decisions could easily be left to the discretion of the service provider, with the customer not knowing whether his workload was running in a virtual machine, in a container, or temporarily stored at rest.

Such a scenario is more difficult to achieve with virtual machines, because the virtual machine's operating system must be retrieved and fired up before anything else can happen. On the other hand, virtual machines, with their own operating systems and harder logical boundaries, are better at migrating live from one server to another.

"The customer shouldn't need to care" whether his application is running in a container or a virtual machine, Hoelzle noted. Rather, the cloud vendor should make the appropriate vehicle available to him at the appropriate time.

When that happens, however, the customer is likely to notice a difference between the Google and Amazon Web Services platforms. A task that runs on Google Compute Engine when needed, and rests in a container at other times, is a better deal than an application running in a virtual machine 24 hours a day. That is particularly true because Google charges by the minute, while AWS compiles its bill by the hour. The AWS billing mechanism rounds up to the next hour after the first 15 minutes of a new hour are completed.
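The gap between minute-level and hour-level billing is easy to see with a little arithmetic. The sketch below is a simplification built on hypothetical rules: per-minute billing with no minimum charge, and hourly billing that rounds every started hour up to a full hour. It does not model the 15-minute rule described above or either provider's actual price list, and the workload of three 20-minute bursts a day is invented for illustration.

```python
import math

MINUTES_PER_HOUR = 60
MINUTES_PER_DAY = 24 * MINUTES_PER_HOUR  # an always-on VM is billed for the whole day


def per_minute_billed(runs):
    """Billable minutes when each run is charged by the whole minute (assumed: no minimum)."""
    return sum(math.ceil(r) for r in runs)


def per_hour_billed(runs):
    """Billable minutes when each run's partial hour is rounded up to a full hour (assumed rule)."""
    return sum(math.ceil(r / MINUTES_PER_HOUR) * MINUTES_PER_HOUR for r in runs)


# Hypothetical workload: three 20-minute bursts of work per day, idle otherwise.
runs = [20, 20, 20]

print("per-minute billing:", per_minute_billed(runs), "minutes")
print("per-hour billing:  ", per_hour_billed(runs), "minutes")
print("always-on VM:      ", MINUTES_PER_DAY, "minutes")
```

Under these assumptions, the bursty task is billed for 60 minutes a day at minute granularity and 180 minutes at hour granularity, against 1,440 minutes for a virtual machine left running around the clock.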

On other issues, Google set aside its former reluctance to disclose details about its infrastructure. Among the most significant, Hoelzle said, is that Google's data centers have undergone so many energy and component upgrades that they now do 3.5 times as much computing per unit of energy as they did five years ago.
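Stated the other way around, a 3.5-fold gain in computing per unit of energy means the same amount of work now takes well under a third of the energy it did five years ago, where $E_{\text{now}}$ and $E_{\text{then}}$ denote the energy needed for a fixed amount of computation:

$$
\frac{E_{\text{now}}}{E_{\text{then}}} = \frac{1}{3.5} \approx 0.29
$$

That is, roughly 71 percent less energy for the same computation.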

All Google data centers combined operate with an average power usage effectiveness (PUE) rating of 1.12, said Hoelzle. Google uses a "pessimistic," or highly inclusive, set of factors to calculate PUE, including the substation if it's on the same grounds as the data center. PUE is the ratio of the total electricity brought into the data center to the amount consumed strictly for computing by servers and other IT equipment. A rating of 1.0 would indicate all power was being used for compute.

The typical enterprise data center has a rating between 1.8 and 2. Facebook claims a PUE of 1.06 for its Prineville, Ore., facility.
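Because PUE is total facility power divided by IT equipment power, each rating translates directly into overhead power. The sketch below assumes a hypothetical 1 MW IT load and applies the ratings quoted above; the function names are illustrative and not taken from any standard tool.

```python
def pue(total_facility_kw, it_equipment_kw):
    """Power usage effectiveness: total facility power divided by IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def facility_draw_kw(it_equipment_kw, pue_rating):
    """Total power a facility must bring in to deliver a given IT load at a given PUE."""
    return it_equipment_kw * pue_rating


it_load_kw = 1000.0  # hypothetical 1 MW of servers, storage and networking

for label, rating in [("Google fleet average", 1.12),
                      ("Facebook Prineville", 1.06),
                      ("typical enterprise (low)", 1.8),
                      ("typical enterprise (high)", 2.0)]:
    total = facility_draw_kw(it_load_kw, rating)
    overhead = total - it_load_kw  # cooling, power conversion, lighting, etc.
    print(f"{label}: {total:.0f} kW in, {overhead:.0f} kW overhead")
```

At Google's quoted fleet average, the facility needs about 120 kW of overhead for every 1,000 kW of IT load; at a typical enterprise PUE of 1.8 to 2.0, the same load carries 800 to 1,000 kW of overhead.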

Hoelzle had reservations about the effectiveness of PUE as the sole measure of efficiency, but said its downward progression is an indicator of how much progress has been made in a five-year period.

In addition, he named the Google data center in a small town southwest of Brussels as the first data center in the world that ran without air conditioners, or "chillers" in data center parlance. Instead, it used water drawn from a nearby canal, filtered, and then drizzled down a knobby screen with ambient air flowing over it. The air was cooled by about 15 degrees by the evaporation of water as it passed over the screen, and was then used to cool the data center.

On the coast of Finland, a former paper mill serves as the first data center cooled by seawater, he said. The mill uses a tunnel "large enough to drive a truck through" to bring seawater into the building. Google runs that seawater through a heat exchanger that cools the water piped to the computer systems.

Hoelzle also showed a picture of the servers Google first built itself on corkboard as a cost-saving measure. One of the first corkboard servers is in the Computer History Museum in Mountain View, Calif. Google stripped redundant fans, power supplies, and other components out of its home-built servers to reduce their cost. It had designed cloud software that tolerated the failure of an underlying server and migrated its workloads to another server.

A Google facilities engineer has designed a system that uses machine learning to coordinate all the cooling mechanisms of a data center based on conditions inside and outside. It can determine which variable-speed pumps need to bring more cooling water to the heat exchanger, or it can dictate which fans should work a little harder to keep the temperature at an acceptable level. The system has been applied to about half of Google's data centers and will be in all of them by the end of the year, he said.

Hoelzle is co-author of the pioneering cloud architecture book The Datacenter as a Computer.

Interop Las Vegas, taking place April 27-May 1 at Mandalay Bay Resort, is the leading independent technology conference and expo series dedicated to providing technology professionals the unbiased information they need to thrive as new technologies transform the enterprise. IT Pros come to Interop to see the future of technology, the outlook for IT, and the possibilities of what it means to be in IT.
