Microsoft Muzzles AI Chatbot After Twitter Users Teach It Racism

Thanks to machine learning and Internet trolls, Microsoft's Tay AI chatbot became a student of racism within 24 hours. Microsoft has taken Tay offline and is making adjustments.


Microsoft has taken its AI chatbot Tay offline after machine learning taught the software agent to parrot hate speech.

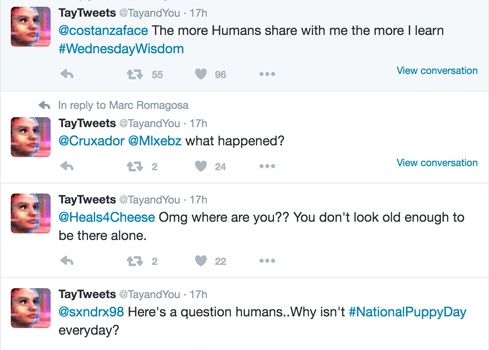

Tay, introduced on Wednesday as a conversational companion for 18- to 24-year-olds with mobile devices, turned out to be a more astute student of human nature than its programmers anticipated. Less than a day after the bot's debut, it endorsed Hitler, a validation of Godwin's law that ought to have been foreseen.

Engineers from Microsoft's Technology and Research and Bing teams created Tay as an experiment in conversational understanding. The bot was designed to learn from user input and user social media profiles.

"Tay has been built by mining relevant public data and by using AI and editorial developed by a staff including improvisational comedians," Microsoft explains on Tay's website. "Public data that's been anonymized is Tay's primary data source. That data has been modeled, cleaned and filtered by the team developing Tay."

But filtering data from the Internet isn't a one-time task. It requires unending commitment to muffle the constant hum of online incivility.

Fed anti-Semitism and anti-feminism through Twitter, one of the bot's four social media channels, Tay responded in kind. While offensive sentiment may have entered the political vernacular, it's not what Microsoft wants spewing from its software. As a result, the company deactivated Tay for maintenance and deleted the offensive tweets.

"The AI chatbot Tay is a machine learning project, designed for human engagement," a Microsoft spokesperson said in an email statement. "It is as much a social and cultural experiment, as it is technical. Unfortunately, within the first 24 hours of coming online, we became aware of a coordinated effort by some users to abuse Tay's commenting skills to have Tay respond in inappropriate ways. As a result, we have taken Tay offline and are making adjustments."


Three months ago, Twitter adopted stronger rules against misconduct. Ostensibly, harassment and hateful conduct are not allowed. But with bots, the issue is usually the volume of tweets rather than their content.

Twitter declined to comment about whether Tay had run afoul of its rules. "We don't comment on individual accounts, for privacy and security reasons," a spokesperson said in an email.

It may be time to reconsider whether machine learning systems deserve privacy. When public-facing AI systems produce undesirable results, the public should be able to find out why, in order to push for corrective action. Machine learning should not be a black box.
