Will Spark, Google Dataflow Steal Hadoop's Thunder?

Apache Spark and Google's Cloud Dataflow service won't kill Hadoop, but they're aimed at the high-value role in big data analysis.

the basics of Hadoop -- the storage layer, management capabilities, and high availability and redundancy features -- as a data platform (just as Dataflow operates on top of the Google Cloud Datastore). But Hadoop vendors are counting on YARN to help them offer all sorts of analysis options on top of that same platform.

With Spark, Databricks is set to argue this week, organizations will be able to replace many components of Hadoop, not just MapReduce. Machine learning and stream processing are the obvious use cases, but Databricks will also highlight its SQL capabilities -- a threat to Hive, Impala, and Drill -- as well as its aspirations for graph analysis and R-based data mining. So what's left to do in other software?


There's more to the Databricks announcements to be revealed Monday afternoon, but Hadoop vendors were already downplaying the potential impact of Google Cloud Dataflow and Spark last week, both in public forums and in emailed responses to questions from InformationWeek.

"Traditional Hadoop's demise started in 2008, when Arun Murthy and the team at Yahoo saw the need for Hadoop to move beyond its MapReduce-only roots," said Shaun Connolly, Hortonworks' VP for corporate strategy, citing the work by now-Hortonworks-executive Murthy to lead the development of YARN. "The arrival of new engines such as Spark is a great thing, and by YARN-enabling them, we help ensure that Hadoop takes advantage of these new innovations in a way that enterprises can count on and consume."

Spark has lots of momentum, acknowledged MapR CMO Jack Norris, but he characterized it as a "very early" technology. "Yes, it can do a range of processing, but there are many issues in the framework that limit the use cases," Norris said. "One example is that it is dependent on available memory; any large dataset that exceeds that will hit a huge performance wall."

Teradata does MapReduce, SQL, Graph, Time-Series, and R-based analyses all on its commercial Aster database, connecting to Hadoop as a data source.

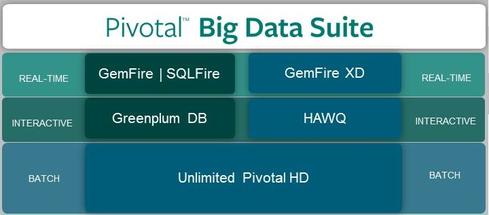

Pivotal's Hadoop platform supports batch processing (meaning MapReduce). Commercial Greenplum and HAWQ support SQL, while GemFire XD supports streaming and iterative, in-memory analysis like machine learning.

It's certainly true that it's very early days for Spark, but its ambitions to be the choice for many forms of data analysis should sound familiar. Teradata and Pivotal, for example, have attempted to stake out much of the same high ground of data analysis with their commercial tools, leaving Hadoop marginalized as just a high-scale, low-cost data-storage platform.

With Teradata, Hadoop is the big storage lake, but the analysis platform is its Aster database, which supports SQL as well as SQL-based MapReduce processing, graph analysis, time-series analysis, and (as of last week) R-based analysis across its distributed cluster.

Pivotal has its own Hadoop distribution, Pivotal HD, and that's where it handles batch workloads. But for interactive analysis it's touting Greenplum database and the derivative HAWQ SQL-on-Hadoop option. For real-time processing it offers GemFire, SQLFire, and the derivative combination of the two, GemFire XD, which it describes as an in-memory alternative to Spark.

Spark's advantage is that it's broad, open source, and widely supported, and it can run not only on Hadoop but also on the Cassandra NoSQL database and Amazon Web Services S3. Spark's disadvantage is that it's very new and little known in the enterprise community. The promise of a simpler, more cohesive alternative to the menagerie of data analysis tools used with Hadoop is certainly compelling. But it has yet to be proven in broad production use that Spark tools are simpler, more cohesive, and as performant as (or more performant than) the better-known options used today.

