Microsoft Azure Outage: Questions Remain

Microsoft Azure's East and South Central US data centers' performance plummeted while West remained relatively unaffected.

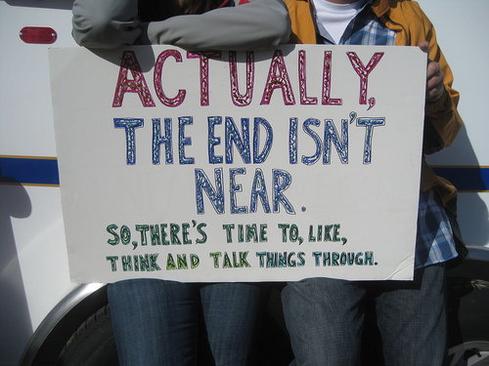

On Nov. 18, Microsoft rolled out an update to its Azure storage service that contained an unintended infinite loop for a certain operation buried in the code. The triggering of that infinite loop in normal operations caused the service to basically freeze. Third-party cloud service monitoring services show that as the update rolled out, each Azure data center experienced a growing service latency followed by an outage about an hour later.

A chart supplied at InformationWeek's request by Dynatrace, the application performance management service formerly part of Compuware, showed latency building to 241.75 milliseconds (about a quarter of a second) before failure -- a disastrous slowdown in storage operations, roughly 15 times the 15-16 millisecond average recorded earlier that day.

Likewise, charts obtained from the Cedexis Radar monitoring service showed a consistent 95%-97% success rate in attempted connections to Azure cloud services throughout most of the day. Shortly after 4 p.m. PST -- 45 minutes prior to the time Microsoft acknowledged the trouble in its Azure Status page -- that response level started to fall off a cliff. Over the next hour, it dropped from 95%-97% to 7%-8% -- a virtual freeze for most users.

Interestingly, the Cedexis data also show that the problem didn't affect all Azure data centers equally. In a chart showing three US and two European data centers (below), Cedexis metrics illustrate that a little after midnight UTC (4 p.m. PST), Microsoft's US East (in northern Virginia) and South Central (in Dallas) are affected more than the other three -- their performance drop is the most precipitous. An hour later, US East is down to a 7%-8% level, while South Central has ground to a halt and is accepting no connections. South Central's complete outage goes on until about 6:45 p.m. PST (my estimate from a graph without fine calibrations).

Azure's US West data center in Northern California shows degraded service but continues to chug along at 55%-60% throughout this period.

In an interview, Cedexis service strategist Pete Mastin said any drop in user connections spells trouble for cloud services, since a failed connection will usually be retried right away. Those retries add traffic the service would not otherwise have to handle, which tends to push the failure rate higher still. In other words, it takes only a small decrease in a service's availability to drive up its latency. In this case, once the trouble started, Azure's latencies built up rapidly.
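The retry effect Mastin describes can be put into rough numbers. The sketch below is a simplified model, not anything Cedexis or Microsoft published: it assumes every attempt succeeds independently with the same probability and that clients give up after a fixed number of immediate retries. The function name `expected_attempts` and the cap of three retries are illustrative assumptions.

```python
def expected_attempts(success_rate: float, max_retries: int) -> float:
    """Expected connection attempts per user request.

    Assumes (hypothetically) that each attempt succeeds independently
    with probability `success_rate` and that a failed attempt is retried
    immediately, up to `max_retries` extra attempts.
    """
    failure_rate = 1.0 - success_rate
    # Attempt number k+1 only happens if the first k attempts all failed.
    return sum(failure_rate ** k for k in range(max_retries + 1))


if __name__ == "__main__":
    # Success rates taken from the ranges quoted in the article.
    for rate in (0.96, 0.80, 0.57, 0.08):
        load = expected_attempts(rate, max_retries=3)
        print(f"success rate {rate:.0%}: {load:.2f} attempts per request")
```

Under these assumptions the load multiplier stays close to 1 while the service is healthy and climbs toward the retry cap as the success rate collapses. A real service would likely fare worse than the model suggests, since the extra traffic itself lowers the success rate.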

[Want to learn more about the Azure storage outage? See Microsoft Azure Storage Service Outage: Postmortem.]

What happened with the two European data centers, however, is even more of an anomaly than the varied outcomes in the US data centers.

Azure's West Europe data center (in Dublin, Ireland) and North Europe center (in Amsterdam) initially showed relatively little impact. North Europe fell off a barely perceptible 1%-2% (my estimate, since the graph doesn't show fine detail), while West Europe dropped to 80% effectiveness.

All five data centers then started to recover at about the same time -- about 1 a.m. UTC (Greenwich Mean Time), or 5 p.m. PST -- and the recovery continued for two hours. At about 3 a.m. UTC (7 p.m. PST), operations were back to normal at both the US and the European data centers.

Then Azure's North Europe data center in Amsterdam suffered a second precipitous drop. From 5 a.m. to 8 a.m. UTC, its user connection rate plummeted from 96% to 37%. Meanwhile, the Dublin-based West Europe center maintained close-to-normal operations, showing a decline of only a few percentage points over the same period.

The performance drop across all data centers makes sense if the storage service update was rolled out simultaneously around the globe -- but, Mastin wondered, why would [Microsoft] do that? Is it considered a best practice to roll out a cloud service update everywhere at the same time? In a statement, corporate VP for Azure Jason Zander said the update had been tested both in isolation and in a limited live production deployment, a process Microsoft calls "flighting." The update had passed all tests.

When it became evident there was a problem with the rollout, why did the Amsterdam data center take a second performance dive just as the business day was getting underway in its time zone? If business activity served as the trigger, why didn't Dublin show a similar drop an hour later? Had troubleshooters implemented a rollback there before the code glitch had time to cause major problems?

It's possible. But then there's the question of the varied responses among the US data centers. Why, at 4 p.m., was the US West data center -- still in the most active part of the business day -- less affected than the US South Central and US East data centers?

Asked about these variations, Mastin was guarded in his response. Having served previously as operations staff at an Internap data center, he's aware of the many individual circumstances and anomalies that can occur in the course of a system update. "We measure things," he said. "We don't necessarily understand why it happened."

That said, he added, "I worked at Internap for four years. We knew rolling out an update to all data centers at one time was never a good idea."
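The alternative Mastin alludes to, updating one data center at a time and checking its health before moving on, can be sketched in a few lines. This is an illustration of the general idea only, not a description of Microsoft's deployment tooling. The function `staged_rollout` and the `deploy` and `is_healthy` callbacks are hypothetical names introduced for the example.

```python
from typing import Callable, List, Sequence


def staged_rollout(
    regions: Sequence[str],
    deploy: Callable[[str], None],
    is_healthy: Callable[[str], bool],
) -> List[str]:
    """Update regions one at a time, halting at the first unhealthy one.

    Returns the regions that received the update, so the caller knows
    where a rollback would be needed. `deploy` and `is_healthy` are
    placeholders for whatever tooling an operator actually uses.
    """
    updated: List[str] = []
    for region in regions:
        deploy(region)
        updated.append(region)
        if not is_healthy(region):
            # Stop here: the remaining regions never see the bad update.
            break
    return updated


if __name__ == "__main__":
    order = ["US West", "US East", "North Europe"]
    touched = staged_rollout(
        order,
        deploy=lambda r: print(f"deploying to {r}"),
        is_healthy=lambda r: r != "US East",  # simulate a failure in one region
    )
    print("regions updated:", touched)
```

In this simulated run the rollout stops after the second region, so the third is never exposed to the faulty update.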

To collect data, Cedexis has 500 enterprise clients embed JavaScript in pages downloaded by their customers each day. The JavaScript triggers a query from the user's location back to the site visited, capturing response times and reporting the results to Cedexis. The company collects 2 to 3 billion such user samples daily from 29,000 Internet service providers.
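The success-rate percentages quoted throughout this article are aggregates of many such samples. As a rough illustration of the arithmetic, the sketch below groups samples by data center and hour and computes the share of successful connections. The `Sample` record and its fields are assumptions made for the example. They do not reflect Cedexis' actual data format.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple, Tuple


class Sample(NamedTuple):
    """One user measurement (hypothetical layout)."""
    region: str     # data center the probe targeted
    hour_utc: int   # hour of day the probe ran, in UTC
    ok: bool        # True if the connection succeeded


def success_rates(samples: Iterable[Sample]) -> Dict[Tuple[str, int], float]:
    """Return the fraction of successful connections per (region, hour)."""
    counts = defaultdict(lambda: [0, 0])  # [successes, total]
    for s in samples:
        bucket = counts[(s.region, s.hour_utc)]
        bucket[0] += int(s.ok)
        bucket[1] += 1
    return {key: ok / total for key, (ok, total) in counts.items()}


if __name__ == "__main__":
    demo = [
        Sample("US East", 0, True),
        Sample("US East", 0, False),
        Sample("US East", 0, False),
        Sample("US East", 0, False),
        Sample("US West", 0, True),
        Sample("US West", 0, True),
    ]
    for (region, hour), rate in sorted(success_rates(demo).items()):
        print(f"{region} at {hour:02d}:00 UTC: {rate:.0%} success")
```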

Microsoft's postmortem is still to come. Let's hope it can explain the outage and address solutions to prevent similar events from happening again.
