Expert Analysis: Is Sentiment Analysis an 80% Solution?

Sentiment-analysis technologies aren't perfect. But what critics are missing is the value of automation, the inaccuracy of human assessment, and the many applications that require only "good-enough" accuracy.

Is 80% sentiment-scoring accuracy good? Is it good enough?

Mikko Kotila, founder and CEO of online media analytics company Statsit, says no. He asks, in a blog post, what automated sentiment analysis is good for and answers, "not much." Yet his assumptions and analysis betray common misapprehensions. The questions are valid, but the facts, reasoning, and conclusion he offers, however widely shared, capture only a small part of the sentiment-analysis picture.

Any look at the sentiment-analysis big picture should start with classification precision. Here, as with every automated solution, human performance creates a baseline for expectations. I suspect that Kotila overestimates two things: the precision of human analysis and, by projecting two vendors' self-reported results onto the whole of automated sentiment analysis, the accuracy of automated solutions in general. That's right: in many contexts, many automated sentiment solutions are far less than 80% precise. They're still useful, however, because more comes into play than raw, document-level classification precision. Automation advantages typically include speed, reach, consistency, and cost. For a more complete picture, add in accuracy-boosting techniques and look at use cases beyond listening platforms.

So here's my own review of the accuracy question and my take on the usefulness of sentiment analysis.

Sentiment Analysis Accuracy

Do humans read sentiment -- broadly, expressions of attitude, opinion, feeling, and emotion -- with 100% accuracy? Mikko Kotila (who is my Everyman; his impressions reflect those of a significant portion of the market) reports that "leading providers such as Sysomos and Radian6 estimate their automated sentiment analysis and scoring system to be 80% accurate." His own assumption about human accuracy comes out in his statement that the "20% difference statistically is huge and comes with an array of problems." What "20% difference" could he possibly see other than the gap between the automated tools' accuracy and human accuracy? Yet we humans do not agree universally with one another on anything subjective, and sentiment, rendered in text that lacks visual or aural cues, is really tricky, even for people. Sadly, we're nowhere close to perfect.

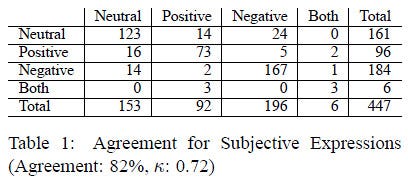

The yardstick is agreement between a method's results and a "gold standard" or, lacking a definitive standard, "inter-annotator agreement," whether between two human annotators, two automated methods, or a human and a machine. I know of only one scientific study of human sentiment-annotation accuracy, "Recognizing Contextual Polarity in Phrase-Level Sentiment Analysis," by Wilson, Wiebe & Hoffmann, 2005.

The University of Pittsburgh researchers found 82% agreement when two individuals assigned phrase-level sentiment polarity to automatically identified subjective statements in a test document set. Polarity (a.k.a. valence) is the sentiment direction: positive, negative, both, or neutral.

The authors further report, "For 18% of the subjective expressions, at least one annotator used an uncertain tag when marking polarity. If we consider these cases to be borderline and exclude them from the study, percent agreement increases to 90%."
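The 82% and 90% figures are raw percent agreement. Here is a minimal Python sketch of how such figures are computed, using made-up labels for ten phrases; the data, label names, and function names are all hypothetical. It also computes Cohen's kappa, a widely used chance-corrected agreement measure that the figures quoted above don't include. It's here only to show that raw agreement can overstate consistency when some labels dominate.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def percent_agreement(a: Sequence[str], b: Sequence[str]) -> float:
    """Fraction of items to which two annotators assigned the same label."""
    if len(a) != len(b) or not a:
        raise ValueError("annotations must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Percent agreement corrected for the agreement expected by chance."""
    n = len(a)
    observed = percent_agreement(a, b)
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement: both annotators independently pick the same label.
    expected = sum(counts_a[lab] * counts_b[lab] for lab in counts_a) / (n * n)
    if expected == 1.0:
        # Degenerate case: both annotators used one identical label throughout.
        return 1.0
    return (observed - expected) / (1 - expected)


def drop_uncertain(a, b, uncertain) -> Tuple[List[str], List[str]]:
    """Exclude items that either annotator flagged as uncertain (borderline)."""
    kept = [(x, y) for x, y, u in zip(a, b, uncertain) if not u]
    return [x for x, _ in kept], [y for _, y in kept]


# Hypothetical phrase-level polarity labels from two annotators.
annotator_1 = ["pos", "neg", "neg", "neutral", "pos", "both", "neg", "pos", "neutral", "neg"]
annotator_2 = ["pos", "neg", "pos", "neutral", "pos", "neg", "neg", "pos", "neutral", "neg"]
# True where at least one annotator marked the item as uncertain.
uncertain = [False, False, True, False, False, True, False, False, False, False]

print(percent_agreement(annotator_1, annotator_2))  # 0.8
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.706
print(percent_agreement(*drop_uncertain(annotator_1, annotator_2, uncertain)))  # 1.0
```

In this toy set, setting aside the two borderline items lifts agreement from 80% to 100%. That is an exaggerated version of the 82%-to-90% jump the authors report.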

The Pittsburgh authors evaluated sentiment at the phrase level; other applications look at feature-, sentence-, and document-level sentiment. Text features include entities such as named individuals and companies; they may also include concepts or topics composed of entities. The situation isn't necessarily better at other levels. (The use cases are different, however; more on that later.)

On the other hand, Bing Liu of the University of Illinois at Chicago told me, "We have done some informal and general study on the agreement of human annotators. We did not find much disagreement and thus did not write any paper on it. Of course, our focus has been on opinions/sentiments on product features/attributes in consumer reviews and blogs. The focus of Wiebe's group has been on political/news type of articles, which tend to be more difficult to judge."

Mike Marshall of text-analytics vendor Lexalytics ran his own experimental test of document-level sentiment analysis and found "overall accuracy was 81.5% with 81 of the positive documents being correctly identified and 82 of the negative ones. This is right in the magic space for human agreement." According to Marshall, "Experience has also shown us that human analysts tend to agree about 80% of the time, which means that you are always going to find documents that you disagree with the machine on." So perhaps human sentiment analysis isn't as good as folks suppose; it's certainly not 100%.

Try a few examples yourself. Imagine that you're not just reading, that you're generating data for monitoring/measurement/analysis purposes. Are these tweets positive, negative, both, or neutral -- at the tweet, sentence, and feature [e.g., public option, Obama, GOP, Avatar] levels?

Seattle's hippest pastor says "Avatar is Satan." http://is.gd/aIkfu

Of course, I cherry-picked those examples to illustrate that it's sometimes difficult to assign sentiment polarity precisely. Take the first example. It isn't explicitly in favor of a health-care public option, is it, even though it's implicitly against public-option opponents? At the tweet level, is it pro, con, neither, or both?

Automated Sentiment Analysis

Regarding automated systems, Bing Liu says that "acceptable accuracy and even the measure of it is quite tricky because sentiment analysis is a multi-faceted problem with several sub-problems. For most practical applications, they all need to be solved. ... In terms of precision and recall of opinion orientation classification (not other sub-problems), I believe a precision around 90% will be sufficient, but some companies asked for near 100% precision based on my practical experience. (They need to be educated!) Recall is a slightly different issue. A reasonable value will be OK as one does not need to catch every sentence with opinions to find the problems of a product."

Mikko Kotila says leading providers such as Sysomos and Radian6 estimate their automated sentiment analysis and scoring system to be 80% accurate. Without citing examples, I asserted that many systems don't do even that well, not that they have to in order to be useful. But can anyone do better?

Dave Nadeau, creator of restaurant-review start-up InfoGlutton, can. According to Nadeau, InfoGlutton is trained on restaurant reviews from 25 sources covering more than 100 restaurants. The proprietary corpus consists of 6,000 reviews totaling 40,000 sentences. Nadeau offers these statistics:

"InfoGlutton sentiment analysis at sentence level is 89.5% accurate, with classifiers tuned for very high (~92%) precision for the positive and negative sentiments.

"InfoGlutton sentiment analysis at review level is 94% accurate, with classifiers tuned for very high (~96%) precision for the positive and negative sentiments."
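Nadeau doesn't say how the classifiers were tuned. One common approach, assumed here purely for illustration, is to raise the decision threshold on a classifier's confidence score until precision reaches a target, accepting lower recall in exchange. The following minimal Python sketch uses invented scores and labels and hypothetical function names; it is not InfoGlutton's method.

```python
from typing import Optional, Sequence, Tuple


def precision_recall_at(scores: Sequence[float], labels: Sequence[bool],
                        threshold: float) -> Tuple[float, float]:
    """Precision and recall when items scoring >= threshold are called positive."""
    predicted = [s >= threshold for s in scores]
    tp = sum(p and y for p, y in zip(predicted, labels))
    fp = sum(p and not y for p, y in zip(predicted, labels))
    fn = sum(y and not p for p, y in zip(predicted, labels))
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def lowest_threshold_for_precision(
        scores: Sequence[float], labels: Sequence[bool],
        target: float) -> Optional[Tuple[float, float, float]]:
    """Lowest cut-off whose precision meets the target.

    Raising the threshold can only lose recall, so the lowest qualifying
    cut-off keeps the most recall. Returns None if no cut-off qualifies.
    """
    for t in sorted(set(scores)):
        precision, recall = precision_recall_at(scores, labels, t)
        if precision >= target:
            return t, precision, recall
    return None


# Hypothetical classifier confidences that a sentence is positive, with gold labels.
scores = [0.95, 0.90, 0.85, 0.80, 0.70, 0.60, 0.55, 0.40, 0.30, 0.20]
labels = [True, True, True, False, True, True, False, False, True, False]

print(lowest_threshold_for_precision(scores, labels, 0.80))
print(lowest_threshold_for_precision(scores, labels, 0.90))  # (0.85, 1.0, 0.5)
```

In this toy data, moving the precision target from 0.80 to 0.90 pushes the cut-off from 0.6 to 0.85 and drops recall from about 83% to 50%. That is the trade-off Bing Liu accepts when he says a "reasonable" recall will do.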

Accuracy Beyond Precision

My earlier Twitter examples allow me to introduce the notion that there's more to accuracy than classification precision. Accuracy in text analytics and search is typically assessed through both precision and recall. Recall is the proportion of target features (documents, entities, whatever) that are found; precision is the proportion of those found that were identified correctly. Doesn't it go without saying that human methods will never match the recall, speed, and reach of automated methods?
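To make the two definitions concrete, here is a minimal Python sketch that computes set-based precision and recall, plus F1, their harmonic mean and one common way to fold both into a single score. The post IDs and the scenario are hypothetical.

```python
from typing import Hashable, Set, Tuple


def precision_recall_f1(retrieved: Set[Hashable],
                        relevant: Set[Hashable]) -> Tuple[float, float, float]:
    """Set-based precision, recall, and F1 (their harmonic mean)."""
    hits = len(retrieved & relevant)  # items that were found AND are correct
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Hypothetical: 10 posts truly complain about a product (IDs 1-10); a monitor
# flags 5 posts as complaints, 4 of them correctly (post 99 is a false alarm).
relevant = set(range(1, 11))
retrieved = {1, 2, 3, 4, 99}
p, r, f1 = precision_recall_f1(retrieved, relevant)
print(p, r, round(f1, 3))  # 0.8 0.4 0.533
```

High precision paired with low recall is the profile of a monitor that reads only some sources: what it flags is mostly right, but it misses much of what's out there.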

What do you make of this tweet?

Le service apres vente de Toshiba est vraiment... MAUVAIS!!!! (Probleme: Regza LED)

Hint: "vraiment mauvais" is French for "truly bad," and "le service après-vente" is after-sales service. I'm sure you recognize "Toshiba," and I'd infer that "Regza LED" is a product. It's a computer's ability to find and analyze sources across languages, and to operate 24/7, scanning volumes of data that would overwhelm humans, that gives automation its edge.

This example illustrates recall. If Toshiba or its rivals monitored only English-language sources, they would miss A LOT of relevant material. The tweet I offered above is in French; I can only guess at the volume of material posted in Japanese, Korean, Spanish, Arabic, Russian, and other languages. I'm not claiming that every automation solution can handle every language, nor that your efforts have to be exhaustive. But I will say that if your brand is multinational, your recall, and with it your overall sentiment-analysis accuracy, will suffer without automation.

Applications Beyond Monitoring

"Sentiment is nice to know, but up until today I haven't heard a single commercial application for it," writes Mikko Kotila. He's like Miranda in Shakespeare's The Tempest, who grows up on an island with only her father, Prospero, for human company. Miranda sees survivors of a shipwreck and exclaims, "O brave new world / That has such people in't!" Prospero's response: "'Tis new to thee."

There are many sentiment-analytics applications with proven ROI; others will prove themselves soon if they haven't already. You just need to look beyond social/media monitoring and measurement to business analytics and operations, to functions such as customer experience management. I'll cite just a few examples, provided by vendors. These examples are focused, but they're representative of experience shared by many users.

First, ROI isn't always measured in dollar terms. Clarabridge reports that for customer Gaylord Hotels, "Automated analysis of survey comments showed that customer experience was measurably enhanced when bell services staff accompanied lost guests to their destinations within a resort, as opposed to merely pointing them in the direction they needed to go. This insight led management to incorporate the process improvement into what Gaylord calls the 'Service Basics.'"

Other commercial applications range from innovations such as an "emotional tone checker" from Lymbix, which targets corporate communications functions, and a similar application from Adaptive Semantics that automates comment moderation for user-generated, online content; to use by consumer-goods companies such as Unilever for marketing-campaign analysis, as I describe in a 2008 article, Sentiment Analysis: A Focus on Applications.

As I say, there are many applications of sentiment analysis that are already delivering business value. For those many, disparate applications, how accurate is accurate enough? Requirements depend on business needs, and "good enough" is a very valid concept. If automated sentiment analysis can help spot one dissatisfied customer who would otherwise have been missed, that's a start. There's always room for improvement, however, and higher accuracy is certainly on the to-do list, for researchers and vendors as hinted at above, and also for practitioners who apply the technology.

Improving Accuracy

My 2008 article describes how consultancy Anderson Analytics applied text technologies as one of three parts of a "triangulation" process seeking to understand consumer sentiment surrounding Unilever's Dove-brand pro.age marketing campaign. It's a truism, common wisdom, that you can usually boost analytical accuracy by applying multiple methods. You can link text-extracted information to transactional and operational records, for instance, to determine which service-center staff our French Toshiba critic interacted with; or, for a survey, you can collect sentiment using both numerical rating scales and free text. And of course, you can also explore steps to improve accuracy with particular techniques.
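The survey case lends itself to a simple triangulation check. Here is a minimal Python sketch, assuming a 1-to-5 rating scale, arbitrary cut-offs for mapping ratings to polarity, and polarity labels produced by some unspecified text classifier. The `SurveyResponse` type and all function names are hypothetical. Where the two signals disagree, the response becomes a candidate for human review.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SurveyResponse:
    response_id: str
    rating: int         # numerical rating, assumed to be on a 1-5 scale
    text_polarity: str  # label from a (hypothetical) free-text classifier


def rating_polarity(rating: int) -> str:
    """Map a 1-5 rating to coarse polarity; the cut-offs are assumptions."""
    if rating >= 4:
        return "positive"
    if rating <= 2:
        return "negative"
    return "neutral"


def flag_disagreements(responses: List[SurveyResponse]) -> List[str]:
    """IDs where the numerical and free-text signals disagree."""
    return [r.response_id for r in responses
            if rating_polarity(r.rating) != r.text_polarity]


responses = [
    SurveyResponse("r1", 5, "positive"),
    SurveyResponse("r2", 4, "negative"),  # good score, unhappy comment
    SurveyResponse("r3", 1, "negative"),
    SurveyResponse("r4", 3, "positive"),
]
print(flag_disagreements(responses))  # ['r2', 'r4']
```

Agreement between rating and text raises confidence in both. Disagreement, as with r2 and r4 here, points either to a classifier error or to a respondent whose comments say something the number doesn't.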

"Coming to a Theater Near You! Sentiment Classification Techniques Using SAS Text Miner," a paper by Jake Bartlett and Russ Albright of SAS Institute, describes the authors' attempt to improve accuracy in sentiment analysis of a collection of movie reviews. They looked at four variations on out-of-the-box use of SAS Text Miner and found they could reduce misclassifications by up to one-third, to 14.4%. Now of course, you don't just throw techniques at problems and hope they will help. Bartlett and Albright cite weightings, synonyms, and part-of-speech tagging and more complex extraction and feature manipulation techniques, but they suggest that part-of-speech tagging may not be effective if you don't have well-formed, grammatical text.
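The excerpt gives only the improved error rate. If "up to one-third" is read as a relative reduction (an assumption; the paper's baseline figure isn't quoted here), the implied out-of-the-box misclassification rate and the corresponding accuracies work out as follows:

```latex
e_{\text{baseline}} \approx \frac{e_{\text{improved}}}{1 - \tfrac{1}{3}}
  = \frac{14.4\%}{2/3} = 21.6\%,
\qquad
\text{accuracy: } 100\% - 21.6\% = 78.4\% \;\longrightarrow\; 100\% - 14.4\% = 85.6\%
```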

Learning More

I've presented what I hope is a systematic, balanced appraisal of the state of the accuracy and usefulness of automated sentiment analysis. If you'd like to learn more, there are a number of places you can continue. Check out:

Sentiment Analysis and Subjectivity, by Bing Liu.

Jan Wiebe's video on the same topic, Subjectivity and Sentiment Analysis.

The monograph Opinion Mining and Sentiment Analysis, by Bo Pang and Lillian Lee.

Finally, a plug: The Sentiment Analysis Symposium, April 13, in New York, will look at a broad set of sentiment-analysis approaches, business applications, solutions, and benefits. Please consider joining us for the conference.
