5 Big Data Use Cases To Watch

Here's how companies are turning big data into decision-making power on customers, security, and more.


We hear a lot about big data's ability to deliver usable insights -- but what does this mean exactly?

It's often unclear how enterprises are using big-data technologies beyond proof-of-concept projects. Some of this might be a byproduct of corporate secrecy. Many big-data pioneers don't want to reveal how they're implementing Hadoop and related technologies for fear that doing so might eliminate a competitive advantage, The Wall Street Journal reports.

Certainly the market for Hadoop and NoSQL software and services is growing rapidly. A September 2013 study by open-source research firm Wikibon, for instance, forecasts an annual big-data software growth rate of 45% through 2017.


According to Quentin Gallivan, CEO of big-data analytics provider Pentaho, the market is at a "tipping point" as big-data platforms move beyond the experimentation phase and begin doing real work. "It's why you're starting to see investments coming into the big-data space -- because it's becoming more impactful and real," Gallivan told InformationWeek in a phone interview. "There are five use cases we see that are most popular."

Here they are:

1. A 360-degree view of the customer

This is the most popular use case, according to Gallivan. Online retailers want to find out what shoppers are doing on their sites -- what pages they visit, where they linger, how long they stay, and when they leave.

"That's all unstructured clickstream data," said Gallivan. "Pentaho takes that and blends it with transaction data, which is very structured data that sits in our customers' ERP [business management] system that says what the customers actually bought."

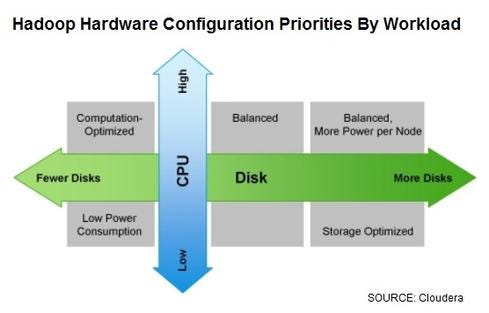


A third big-data source, social media sentiment, is also added to the mix, providing the desired 360-degree view of the customer. "So when [retailers] make target offers directly to their customers, they not only know what the customer bought in the past, but also what the customer's behavior pattern is… as well as sentiment analysis from social media."
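Pentaho's actual blending pipeline isn't described here, but the basic idea of joining the three sources can be sketched in a few lines. This minimal Python example assumes, hypothetically, that every clickstream event, ERP order, and social media post already carries a shared `customer_id`, and that sentiment has been scored upstream. The record shapes and function names are illustrative, not a vendor API.

```python
from dataclasses import dataclass, field


@dataclass
class CustomerView:
    """One row of a hypothetical blended '360-degree' customer view."""
    customer_id: str
    pages_viewed: list = field(default_factory=list)      # from clickstream
    seconds_on_site: int = 0                               # from clickstream
    purchases: list = field(default_factory=list)          # from ERP transactions
    sentiment_scores: list = field(default_factory=list)   # from social media


def build_customer_views(clicks, orders, posts):
    """Blend three sources keyed on a shared customer_id (an assumption).

    In practice, matching web sessions and social accounts to known
    customers is a hard problem of its own; this sketch skips it.
    """
    views = {}

    def view_for(customer_id):
        # Create the blended record the first time a customer shows up in any source.
        if customer_id not in views:
            views[customer_id] = CustomerView(customer_id)
        return views[customer_id]

    for click in clicks:  # where shoppers went and how long they lingered
        v = view_for(click["customer_id"])
        v.pages_viewed.append(click["page"])
        v.seconds_on_site += click["seconds"]

    for order in orders:  # what they actually bought
        view_for(order["customer_id"]).purchases.append(order["sku"])

    for post in posts:  # sentiment assumed already scored upstream, e.g. -1.0 to 1.0
        view_for(post["customer_id"]).sentiment_scores.append(post["score"])

    return views
```

A retailer could then look up `views["c1"]` before making an offer and see browsing behavior, past purchases, and sentiment side by side.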

2. Internet of Things

The second most popular use case involves IoT-connected devices managed by hardware, sensor, and information security companies. "These devices are sitting in their customers' environment, and they phone home with information about the use, health, or security of the device," said Gallivan.

Storage manufacturer NetApp, for instance, uses Pentaho software to collect and organize "tens of millions of messages a week" that arrive from NetApp devices deployed at its customers' sites. This unstructured machine data is structured, loaded into Hadoop, and then pulled out for analysis by NetApp.
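The step of turning "phone home" messages into structured records can be illustrated with a short sketch. The message layout below (a timestamp followed by `key=value` pairs) is purely hypothetical, since NetApp's real format isn't described; the output is fixed-column, tab-separated text of the kind that is easy to load into Hadoop for later analysis.

```python
import re
from typing import Iterable, Iterator, Optional

# Hypothetical "phone home" format: a timestamp followed by key=value pairs, e.g.
# "2014-03-01T12:00:00Z serial=NA123 status=DEGRADED temp_c=61"
LINE = re.compile(r"^(?P<ts>\S+)\s+(?P<pairs>.*)$")
PAIR = re.compile(r"(\w+)=(\S+)")


def parse_message(raw: str) -> Optional[dict]:
    """Turn one raw device message into a flat record, or None if it is malformed."""
    m = LINE.match(raw.strip())
    if m is None:
        return None
    record = {"ts": m.group("ts")}
    record.update(PAIR.findall(m.group("pairs")))  # list of (key, value) tuples
    return record


def to_tsv(records: Iterable[dict], columns: list) -> Iterator[str]:
    """Emit fixed-column, tab-separated lines; missing fields become empty strings."""
    for record in records:
        yield "\t".join(record.get(col, "") for col in columns)
```

Malformed lines come back as `None`, so a batch job can count and drop them rather than failing on a single bad message.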

3. Data warehouse optimization

This is an "IT-efficiency play," Gallivan said. A large company, hoping to boost the efficiency of its enterprise data warehouse, will look for unstructured or "active" archive data that might be stored more cost-effectively on a Hadoop platform. "We help customers determine what data is better suited for a lower-cost computing platform."
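How that determination is made isn't spelled out, but one simple heuristic is to flag large, rarely queried tables as offload candidates. The sketch below assumes, hypothetically, that per-table size and 90-day query counts are available, and that the query threshold and per-terabyte costs are inputs the company supplies. None of these figures come from Pentaho.

```python
from dataclasses import dataclass


@dataclass
class TableStats:
    name: str
    size_tb: float
    queries_last_90_days: int


def offload_candidates(tables, max_queries=10):
    """Return rarely queried tables, largest first (the threshold is an assumption)."""
    cold = [t for t in tables if t.queries_last_90_days <= max_queries]
    return sorted(cold, key=lambda t: t.size_tb, reverse=True)


def estimated_savings(candidates, warehouse_cost_per_tb, hadoop_cost_per_tb):
    """Cost difference from keeping the candidates on the cheaper platform instead."""
    total_tb = sum(t.size_tb for t in candidates)
    return total_tb * (warehouse_cost_per_tb - hadoop_cost_per_tb)
```

Sorting by size puts the biggest potential savings first; a real assessment would also weigh query latency requirements and data that must stay in the warehouse for compliance reasons.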

4. Big data service refinery

This means using big-data technologies to break down silos across data stores and sources to increase corporate efficiency.

A large global financial institution, for instance, wanted to move from next-day to same-day balance reporting for its corporate banking customers. It brought in Pentaho to take data from multiple sources, process and store it in Hadoop, and then pull it out again. This allowed the bank's marketing department to examine the data "more on an intra-day than a longer-frequency basis," Gallivan told us.

"It was about driving an efficiency gain that they couldn't get with their existing relational data infrastructure. They needed big-data technologies to collect this information and change the business process."

5. Information security

This last use case involves large enterprises with sophisticated information security architectures, as well as security vendors looking for more efficient ways to store petabytes of event or machine data. In the past, these companies would store this information in relational databases. "These traditional systems weren't scaling, both from a performance and cost standpoint," said Gallivan, adding that Hadoop is a better option for storing machine data.
