In-Memory Databases: Do You Need The Speed?

IBM, Microsoft, Oracle, and SAP are ramping up the fight to become your in-memory technology provider.

Download the entire March 3 issue of InformationWeek, In-Memory Databases, distributed in an all-digital format.

Here are three surefire facts you need to consider about in-memory databases, along with one hard question those facts should lead you to ask.

First, databases that take advantage of in-memory processing really do deliver the fastest data-retrieval speeds available today, which is enticing to companies struggling with high-scale online transactions or timely forecasting and planning.

Second, though disk-based storage is still the enterprise standard, the price of RAM has been declining steadily, so memory-intensive architectures will eventually replace slow, mechanical spinning disks.

Third, a vendor war is breaking out to convert companies to these in-memory systems. That war pits SAP -- application giant but database newbie -- against database incumbents IBM, Microsoft, and Oracle, which have big in-memory plans of their own.

Which leads to the question for would-be customers: So what if you can run a query or process transactions 10 times, 20 times, even 100 times faster than before? What will that difference do for your business? The answer will determine whether in-memory databases are a specialty tool for only a few unique use cases, or a platform upon which all of your enterprise IT runs.

Pioneer companies are finding compelling use cases. In-memory capabilities have let the online gaming company Bwin.party go from supporting 12,000 bets per second to supporting up to 150,000. That's money in the bank. For the retail services company Edgenet, in-memory technology has brought near-real-time insight into product availability for customers of AutoZone, Home Depot, and Lowe's. That translates into fewer wasted trips and higher customer satisfaction.

SAP's Hana in-memory database lets ConAgra test new approaches for material forecasting, planning, and pricing. "Accelerating something that exists isn't very interesting," Mindy Simon, ConAgra's vice president of IT, said at a recent SAP event. "We're trying to transform what we're doing with integrated forecasting."

ConAgra, an $18 billion-a-year consumer packaged goods company, must quickly respond to the fluctuating costs of 4,000 raw materials that go into more than 20,000 products, from Swiss Miss cocoa to Chef Boyardee pasta. What's more, if it could make its promotions timelier by using faster analysis, ConAgra and its retailer customers could command higher prices in a business known for razor-thin profit margins.

"If there's a big storm hitting the Northeast this weekend, for example, we want to make sure that Swiss Miss cocoa is available in that end-cap display," Simon said. But a company can't do that quickly if it has to wait for overnight data loads and hours-long planning runs to get a clear picture of in-store inventories, available product, and the profitability tied to various pricing scenarios.

With its Hana platform, SAP has been an early adopter and outspoken champion of in-memory technology, but it certainly doesn't have a monopoly on using RAM for processing transactions or analyzing data. Database incumbents IBM, Microsoft, and Oracle have introduced or announced their own in-memory capabilities and plans, as have other established vendors and startups. The key difference is that the big three incumbents are looking to defend legacy deployments and preserve license revenue by adding in-memory features to conventional databases. SAP is promising "radical simplification," with an entirely in-memory database that it says will eliminate layers of data management infrastructure and cut the overall tab for databases and information management.

As the in-memory war of words breaks out in 2014, the market is sure to get confusing. To cut through the hyperbole, here's a close look at what leading vendors are promising and, more importantly, what early adopters are saying about the business benefits.

SAP's four promises

If you're one of the 230,000 customers of SAP, you've been hearing about in-memory technology for more than three years, as it's at the heart of Hana. SAP laid out a grand plan for Hana in 2010 and has been trumpeting every advancement as a milestone ever since. It's now much more than a database management system, packing analytics, an application server, and other data management components into what the vendor calls the SAP Hana Platform. At the core of SAP's plans are four big claims about what Hana will do for customers.

First, SAP says Hana will deliver dramatically faster performance for applications. Second, SAP insists Hana can deliver this performance "without disruption" -- meaning customers don't have to rip out and replace their applications. Third, SAP says Hana can run transactional and analytical applications simultaneously, letting companies eliminate "redundant" infrastructure and "unnecessary" aggregates, materialized views, and other copies of data. Fourth, SAP says Hana will provide the foundation for new applications that weren't possible on conventional database technologies. ConAgra's idea for real-time pricing-and-profitability analysis is a case in point.

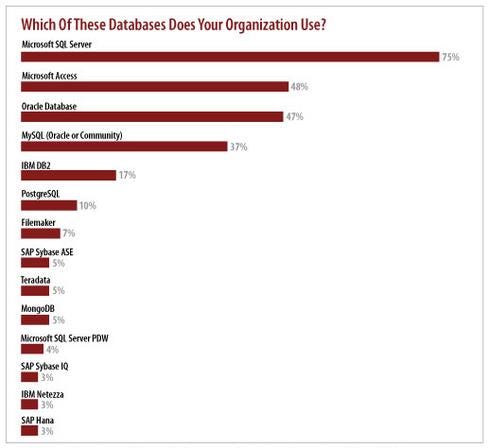

Figure 1: (Source: InformationWeek 2014 State of Database Technology Survey of 955 business technology professionals)

The first and biggest challenge for SAP is persuading customers to use Hana in place of the proven databases they've been using for years. Roughly half of SAP customers run their enterprise-grade applications on Oracle Database. Most other SAP customers use IBM DB2 and Microsoft SQL Server, in that order. SAP says it has more than 3,000 Hana customers, but that's just over 1% of its total customer base. IBM, Microsoft, and Oracle combined have upward of 1 million database customers. The dominance of the big three is confirmed by our recent InformationWeek 2014 State of Database Technology survey (see chart).

The value of faster performance

Nobody questions that in-memory performance beats disk-based performance. Estimates vary depending on disk speed and available input/output (I/O) bandwidth, but one expert puts RAM latency at 83 nanoseconds and disk latency at 13 milliseconds. With 1 million nanoseconds in a millisecond, it's akin to comparing a 1,200-mph F/A-18 fighter jet to a garden slug.

You can't capture this entire speed advantage, because CPU processing time and other constraints are in the mix, but disk I/O has long throttled performance. In-memory performance improvements vary by application, data volume, data complexity, and concurrent-user loads, but Hana customers report that the differences can be dramatic.
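To make that arithmetic concrete, here is a minimal Python sketch that computes the raw latency ratio from the two figures quoted above, then applies an Amdahl's-law-style estimate to show why the full advantage is out of reach. The function names and the 90% I/O share are illustrative assumptions, not numbers from any vendor or from the expert cited.

```python
# Latency figures quoted in the article (one expert's estimate).
RAM_LATENCY_S = 83e-9    # 83 nanoseconds
DISK_LATENCY_S = 13e-3   # 13 milliseconds


def latency_ratio(disk_s=DISK_LATENCY_S, ram_s=RAM_LATENCY_S):
    """Return how many times slower one disk access is than one RAM access."""
    return disk_s / ram_s


def overall_speedup(io_fraction, io_speedup):
    """Amdahl's-law-style estimate of end-to-end speedup.

    io_fraction: share of total run time spent waiting on disk I/O (0..1).
                 This is a hypothetical input; measure it for a real workload.
    io_speedup:  how much faster the I/O portion becomes.
    """
    if not 0.0 <= io_fraction <= 1.0:
        raise ValueError("io_fraction must be between 0 and 1")
    if io_speedup <= 0:
        raise ValueError("io_speedup must be positive")
    return 1.0 / ((1.0 - io_fraction) + io_fraction / io_speedup)


if __name__ == "__main__":
    ratio = latency_ratio()
    print(f"Raw latency ratio, disk vs. RAM: about {ratio:,.0f}x")
    # Assumption for illustration only: 90% of run time is disk-bound.
    print(f"Overall speedup at 90% I/O share: {overall_speedup(0.9, ratio):.2f}x")
```

The raw ratio works out to roughly 157,000 to 1, yet with the assumed 90% I/O share the end-to-end gain stays just under 10 times, because the remaining 10% of the work (CPU time and everything else) doesn't get any faster.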

Maple Leaf Foods, a $5 billion-a-year Canadian supplier of meats, baked goods, and packaged foods, finds that profit-and-loss reports that took 15-18 minutes on an SAP Business Warehouse deployed on conventional databases now take 15-18 seconds on the Hana Platform. This is an analytical example demonstrating 60 times faster performance. Kuljeet Singh Sethi, CIO of Avon Cycles, an Indian bicycle manufacturer now running SAP Business Suite on Hana, said a complex product delivery planning process that used to take 15-20 minutes now takes "just a few seconds" on Hana. This is a transactional example demonstrating 300-400 times faster performance (if "a few" seconds is three).
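The speedup multiples above are simple before-and-after ratios. The short Python sketch below reproduces them from the durations the two companies reported. The `speedup` helper is a hypothetical name used for illustration, and the three-second figure for Avon Cycles is the article's own reading of "a few seconds."

```python
SECONDS_PER_MINUTE = 60


def speedup(before_s, after_s):
    """Return how many times faster a job runs, given before/after durations in seconds."""
    if after_s <= 0:
        raise ValueError("after_s must be positive")
    return before_s / after_s


# Maple Leaf Foods: 15-18 minutes down to 15-18 seconds.
maple_leaf = (
    speedup(15 * SECONDS_PER_MINUTE, 15),
    speedup(18 * SECONDS_PER_MINUTE, 18),
)

# Avon Cycles: 15-20 minutes down to "a few seconds" (taken as 3 seconds).
avon = (
    speedup(15 * SECONDS_PER_MINUTE, 3),
    speedup(20 * SECONDS_PER_MINUTE, 3),
)

if __name__ == "__main__":
    print(f"Maple Leaf Foods: {maple_leaf[0]:.0f}x to {maple_leaf[1]:.0f}x")
    print(f"Avon Cycles: {avon[0]:.0f}x to {avon[1]:.0f}x")
```

Changing the assumed three seconds to five would cut the Avon range to 180-240 times, which is why the parenthetical caveat matters.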

What's important, though, is what that faster speed lets Maple Leaf and Avon do that they couldn't do before. For example, both companies are moving to near-real-time data loading instead of overnight batch processes, so they can support same-day planning and profitability analysis. This step could improve manufacturing efficiency and customer service, as well as simplify the data management processes themselves by eliminating the need for data aggregations.

Similar claims of performance gains come from outside the SAP camp. Temenos, a banking software provider that uses IBM's in-memory-based BLU Acceleration for DB2 (introduced in April), reports that queries that used to take 30 seconds now take one-third of a second, thanks to BLU's columnar compression and in-memory analysis. That speed will make the difference between showing only a few recent transactions online or on mobile devices and providing fast retrieval of any transaction, says John Schlesinger, Temenos's chief enterprise architect. Given that an online or mobile interaction costs the bank 10-20 cents to support, versus $5 or more for a branch visit, the pressure to deliver fast, unfettered online and mobile performance will only increase, Schlesinger says.

In contrast to SAP Hana, which addresses both analytical and transactional applications, BLU Acceleration focuses strictly on analytics. Microsoft has gone the other route, focusing on transactions with its In-Memory Online Transaction Processing (OLTP) option for SQL Server 2014, which is set for release by midyear. Tens of thousands of Microsoft SQL Server customers have downloaded previews of the product, which includes the in-memory feature formerly known as Project Hekaton.

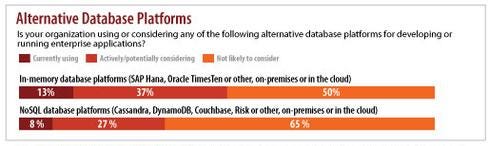

Figure 2: (Source: InformationWeek 2013 Enterprise Application Survey of 263 business technology professionals with direct or indirect responsibility for enterprise applications)

Edgenet, a company that provides embedded software-as-a-service for retailers, started testing Microsoft's In-Memory OLTP two years ago. The technology has let the company move from overnight batch loading to near-real-time insight into store-by-store product availability. AutoZone, an Edgenet customer, carries 300,000 items across more than 5,000 stores. By putting product availability data on database tables running on In-Memory OLTP, Edgenet now provides inventory updates every 15 minutes. "If the retailer can send us data in a message queuing type process, we can report it in real time," says Mike Steineke, Edgenet's vice president of IT. "The only constraint is how quickly the retailer's backend systems can get us the data."

Without disruption?

In-memory seems promising, but most companies won't go there if it requires replacing applications. Like SAP, Microsoft has promised that customers will be able to move to In-Memory OLTP without such disruption (not counting the upgrade to SQL Server 2014). If true in practice, this promise would apply to the gigantic universe of applications that run on Microsoft SQL Server. SAP's promise applies mainly to SAP customers and the smaller universe of SAP applications.

Bwin.party, which is known for online gambling games such as PartyPoker, wouldn't have moved to in-memory if it required major application revisions. It wanted to scale up its online sports betting business three years ago, but it was bumping up against I/O constraints on SQL Server 2008 R2. "We were able to swap out the database without touching the underlying application at all," says Rick Kutschera, manager of database engineering. Not having to change queries or optimize apps for in-memory tables means Bwin.party can quickly scale up its betting operations. That flexibility came in handy in 2013, when New Jersey legalized online gambling and Bwin.party was able to launch gaming sites in partnership with casinos in Atlantic City.

Both Kutschera of Bwin.party and Steineke of Edgenet say they also implemented In-Memory OLTP without server changes. They could do so because they moved only selected tables requiring RAM speed into memory. (SAP Hana, by contrast, puts the entire database in memory, so it requires dedicated, RAM-intensive servers.) The coming release of Microsoft SQL Server 2014 promises tools that automatically determine which tables within a database would most benefit from in-memory performance, so that administrators can take best advantage of the new feature.

Oracle announced in September that it will introduce an in-memory option for its flagship Oracle Database 12c. (Oracle acquired the TimesTen in-memory database vendor way back in 2005, but that 18-year-old database is used mainly in telecom and financial applications.) CEO Larry Ellison has hinted that Oracle will release the in-memory product early next year, and he's already assuring customers that it will not be disruptive. "Everything that works today works with the in-memory option turned on, and there's no data migration," Ellison said at Oracle OpenWorld 2013 in September.

That "migration" comment is aimed at SAP, which insists that switching from Oracle to Hana isn't difficult. But technical guidance on SAP's site suggests that an SAP Business Warehouse migration isn't as simple (no surprise) as upgrading from Oracle 11g to Oracle 12c.

Last year, SAP offered the option of letting companies run their entire SAP Business Suite (including ERP, CRM, and other transactional applications) on the Hana platform. SAP says more than 800 customers have committed to making that move, and at least 50 have done so. Avon Cycles moved Business Suite from Oracle to Hana last fall. Shubhindra Jha, Avon's IT manager, says the complete process, including planning, hardware installation, testing, and production rollout, took 90 days, with only a couple of hours of downtime. However, Avon is a relatively small company, with 1,800 employees and 75 ERP users.

SAP vs. the incumbents

Make no mistake: The promises from Oracle, IBM, and Microsoft are quite different from the ambitious aspirations of SAP. Avoiding disruption is crucial for the three incumbents, because they want to keep and extend their business with database customers.

SAP isn't trying to protect legacy databases and data management infrastructure, so it's encouraging companies to get rid of databases and other "redundant" infrastructure through what it calls "radical simplification." SAP's pitch is that, by running both transactional and analytical applications in Hana, entirely in-memory, companies can dump separate data warehousing, middleware, and application server infrastructure, as well as data aggregates and other copies of data invented to get around disk I/O bottlenecks.

IBM, Microsoft, and Oracle aren't proposing that you get rid of any databases or data warehouses -- only that you upgrade to their latest products to add their new in-memory options. What's more, they have separate products for transactional and analytical workloads that can create yet more copies of data and require yet more licenses.

IBM's BLU Acceleration is for analytics, but IBM also offers all-flash storage arrays to eliminate disk I/O bottlenecks and speed transactional applications and database performance. Flash isn't as fast as RAM, but it's much faster than disk. IBM says these arrays cost far less than adding in-memory database technology and still can reduce transaction times by as much as 90%.

Microsoft provides PowerPivot and Power View plugins for Excel as its answer for in-memory analytics, but this client-side approach can create disconnected islands of analysis with disparate data models and versions of information from user to user.

Oracle's answer for in-memory analytical performance is Exalytics, but this caching appliance overlaps with its Exadata machine, creates more copies of data, and requires a TimesTen or Essbase in-memory database license. The Oracle Database 12c In-Memory Option isn't expected to be available in general release until next year at the earliest.

SAP's Hana Platform looks cleaner and simpler on paper, but we haven't yet seen much evidence of "radical simplification." SAP is the only company we know of running almost all its applications on the platform. All the companies we've interviewed or read about are picking and choosing what they run on Hana.

Avon Cycles, for example, runs its Business Suite on Hana, but it says it won't migrate Business Warehouse until it's sure it can replicate all its business intelligence queries and reports -- with adequate historical data. Queries and reports, after all, tend to run against aggregates, materialized views, cubes, and other artifacts of the disk age, so you can't get to "radical simplification" without refactoring reports and queries to run entirely in-memory.

Maple Leaf Foods is migrating an analytical Business Planning and Consolidation application from Oracle onto Hana, but it has no plans to migrate its entire SAP data warehouse running on Oracle onto the Hana platform. It's still running Hana in a "sidecar" deployment alongside the warehouse. "We only accelerate the data that creates value, so we're picky about what we move and the amount of data we move over to the sidecar, whether that's one year's, two years', or three years' worth of data," says Michael Correa, vice president of information solutions at Maple Leaf. "We've looked at the possibilities for CRM on Hana, and we're intrigued by the idea of putting the entire Business Suite on Hana, but it's not a priority for us either in terms of acceleration or simplicity for the next couple of years."

Most companies will follow this line of thinking as they ask the hard questions: What's the cost and risk of faster processing, and do the differences drive lower costs or higher revenue? IT will answer those questions on a case-by-case, function-by-function basis. Keep in mind that these in-memory options generally demand upgrades to the latest versions of the vendor's software, whether that's SAP BW or Business Suite, IBM DB2, Microsoft SQL Server, or Oracle Database. That cost alone will push back implementation for many companies. So expect the in-memory war to heat up in 2014, but the battle to play out over years to come.
