Hortonworks Deploys Hadoop Into Public Clouds

Hortonworks Data Platform 2.3 contains ease-of-use features, including automated cluster building and deployment into AWS, Azure, or Google.

Hortonworks' latest release of its Hadoop system adds several key features, including guided configuration of the Hadoop Distributed File System, a dashboard with key performance indicators for Hadoop operations, and automated discovery for adding new servers to the cluster.

The release also adds encryption for data at rest, which was previously available only for data in motion, "removing another obstacle to enterprise adoption," said Shaun Connolly, VP of corporate strategy at Hortonworks.

Removing obstacles to enterprise adoption is the theme of the enhancements included in the 2.3 release of Hortonworks Data Platform. The release was announced Tuesday, on the eve of this week's two-day Hadoop Summit in San Jose, Calif. It features greater ease of implementation, monitoring, and management -- all intended to make Hadoop more acceptable in the enterprise.

One interesting feature now available is Hortonworks SmartSense, a monitoring service for users of Hadoop systems that's available whether Hadoop is running on-premises or in a public cloud such as Amazon Web Services.

SmartSense collects configuration and performance data on a running Hadoop engine from the server's log files. It gathers the same data for subsystems as well, such as Apache Spark for streaming data to Hadoop servers or Apache YARN for managing a Hadoop cluster. Connolly said customers may simply add SmartSense to their existing subscriptions to gain its monitoring results.

Hortonworks collects a customer's data from log files through SmartSense and loads it into its technical support Hadoop cluster for analysis. With the results, the support team "can offer proactive guidance on how to tune and optimize for performance," Connolly said in an interview.


Other enhancements include a new user interface, based on Apache Ranger, that lets the enterprise chief security officer or administrator implement security measures. Instead of needing to learn the commands of the Hadoop command line, security administrators can set up policies to control access to Hortonworks Data Platform and its subsystems, including HBase, the NoSQL system that runs on top of the Hadoop Distributed File System. HBase functions like Google's BigTable, storing data for input into or output from MapReduce jobs that apply analytical functions to large amounts of data.

The Atlas metadata system is now part of the 2.3 release of the Hortonworks platform. At the start of the year, Hortonworks initiated the Atlas project, which was accepted in April as an Apache incubator project. Hortonworks enlisted Aetna, Merck, Target, JP Morgan Chase, and Schlumberger to work on Atlas as a subsystem that would generate metadata around Hadoop data, allowing data sets to be tagged to capture where they originated and who handled them along different steps of their use.

Each of the companies has assigned four to five engineers to work on Atlas, "which helps us make sure we're solving real enterprise problems," said Connolly.
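The kind of tagging described for Atlas, recording where a data set originated and who handled it at each step, can be pictured with a small data structure. The Python sketch below is only an illustration: `DatasetLineage`, its fields, and the sample names are hypothetical and are not part of the Atlas API.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class DatasetLineage:
    """Hypothetical lineage record; not an Atlas class."""
    name: str
    origin: str  # where the data set came from
    steps: List[Tuple[str, str]] = field(default_factory=list)  # (handler, action)

    def record(self, handler: str, action: str) -> None:
        """Append one handling step to the data set's history."""
        self.steps.append((handler, action))

    def handlers(self) -> List[str]:
        """Return each handler once, in the order it first touched the data."""
        seen: List[str] = []
        for handler, _ in self.steps:
            if handler not in seen:
                seen.append(handler)
        return seen


# Illustrative usage with made-up names.
claims = DatasetLineage(name="claims_2015", origin="billing-system-export")
claims.record("etl-team", "loaded into HDFS")
claims.record("analytics-team", "joined with customer table")
claims.record("etl-team", "archived")
```

Keeping the steps as an ordered list preserves the sequence of handling, which is what lets an auditor trace a data set back to its origin.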

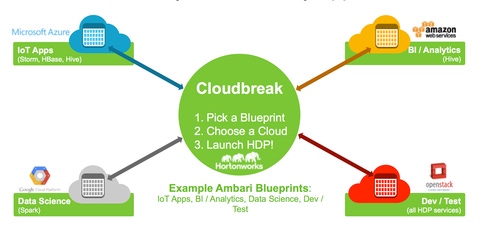

Another new feature flows out of Hortonworks' acquisition of SequenceIQ in April. Its CloudBreak tool allows a user to rapidly deploy a Hadoop cluster into a public cloud environment. Its target may be Amazon Web Services, Microsoft Azure, or the Google Cloud Platform. Connolly said CloudBreak understands the differences between the services. Told to establish a 100-node cluster, it will authorize the first 10 nodes, then continue adding as the user establishes tasks on the first 10.

"You can start an analytical process without waiting for all 100 nodes to deploy," he said, describing an instant service that's popular with developer users of Hadoop.
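The staged rollout Connolly describes, in which work begins on the first 10 nodes while the rest of a 100-node cluster is still being added, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not CloudBreak's actual interface: the function names, the node naming scheme, and the fixed batch size are all hypothetical.

```python
from typing import Iterator, List


def provision_in_batches(total_nodes: int, batch_size: int) -> Iterator[List[str]]:
    """Yield hypothetical node names one batch at a time until all are provisioned."""
    if total_nodes < 0 or batch_size <= 0:
        raise ValueError("total_nodes must be >= 0 and batch_size must be > 0")
    started = 0
    while started < total_nodes:
        count = min(batch_size, total_nodes - started)
        yield [f"node-{i:03d}" for i in range(started, started + count)]
        started += count


def run_cluster(total_nodes: int = 100, batch_size: int = 10) -> List[int]:
    """Return the number of usable nodes observed after each batch joins."""
    active: List[str] = []
    sizes: List[int] = []
    for batch in provision_in_batches(total_nodes, batch_size):
        active.extend(batch)
        # Tasks could be scheduled on `active` at this point,
        # before the remaining batches have been provisioned.
        sizes.append(len(active))
    return sizes
```

Because the provisioning function is a generator, the caller gets control back after every batch, which is the property that lets an analytical process start before the full cluster exists.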

Guided configurations available with release 2.3 make it easier to implement the Hadoop Distributed File System, Hive data warehousing, HBase, and YARN. They make installation faster and more predictable, said Connolly.

An operations dashboard can be given parameters and then displays key performance indicators to the Hadoop administrator.

As new servers become available in a cluster, release 2.3's automated discovery finds them and integrates them into operations.

Hortonworks reported first-quarter revenue of $22.8 million on May 12, a 167% increase over Q1 in 2014. It also lost $40.5 million in the quarter.

Hortonworks was spun out of Yahoo in June 2011, with $23 million in backing from Benchmark Capital and Yahoo. That funding was followed by $25 million from Index Ventures in November of that year. Yahoo is a big user of Hadoop. At the time of Hortonworks' launch, Yahoo had 18 Hadoop systems running on 42,000 servers.

Other Hadoop suppliers include Cloudera, MapR, and the Apache Software Foundation.
