Informatica Self-Service Platform Spots Sensitive Data

Informatica unveils its Intelligent Data Platform roadmap for clean, secure self-service data, even at big data scale.

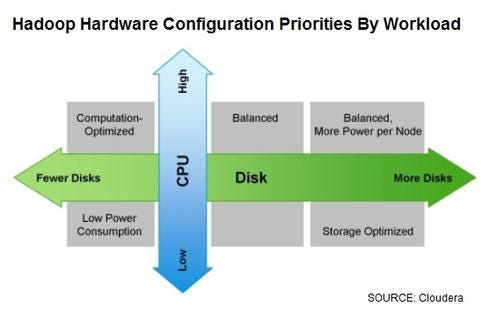

10 Hadoop Hardware Leaders

Victory won't go to those with the most data. It will go to those who make the best use of data. This simple principle is behind Informatica's Tuesday unveiling of the Intelligent Data Platform.

A collection of current and planned capabilities, the Intelligent Data Platform seeks to exploit Informatica's unique insight not into the data itself -- that's the job of BI and analytics -- but into the metadata, the data about the data.

"Data has become a boardroom issue," Marge Breya, Informatica's chief marketing officer, said in a briefing with a handful of editors and analysts in New York. "We know all the paths of data access and the data types. We know where all the PCI [credit/debit card], HR, and privacy-sensitive data resides, where it has been, and where it has been moved."

Informatica says it will take advantage of this knowledge with Data Self-Service for Business Users and Secure@Source, two of the new platform components. Data Self-Service for Business Users is a cloud-based, search-style interface that will help users find appropriate internal and external data sources with the aid of algorithms and recommendation engines. "Smart" provisioning will ensure that data access is provided only to those with appropriate rights and privileges. Data Self-Service is expected to debut this year.

Secure@Source is an audit and compliance tool that will complement, rather than compete with, security technologies, according to Informatica. Set for release next year, Secure@Source is designed to help organizations narrow down where sensitive data resides, physically and logically, and then prioritize which stores need to be better secured with which types of security technologies.

"This is an application we're developing on top of the Intelligent Data Platform, and it plugs into the metadata we already have access to," Anil Chakravarthy, executive vice president and chief product officer at Informatica, said during the briefing in New York. Chakravarthy joined Informatica late last year from Symantec, where he was executive vice president of information security.

A third new leg of the Intelligent Data Platform is Shared Data Lake, which will provide a mechanism to collect, discover, refine, govern, provision, securely share, and control all data assets and internal and external feeds -- once again tapping Informatica's metadata insight. Informatica describes it as "an easy, one-stop shop for data search, discovery, and consumption," whether the source systems are batch or real-time. Think of it as a virtual data lake with a self-service UI for business analysts.

The Intelligent Data Platform will make use of current Informatica capabilities, including its venerable PowerCenter data integration technologies and the Informatica Vibe embeddable virtual data machine. The latter supports reuse of data integration mappings created for users or departments across cloud environments, enterprise data centers, and big data platforms such as Hadoop.

Informatica also announced Tuesday that Western Union, the global money transfer business, is using the integration vendor's technology to power a year-old Hadoop deployment that's ensuring secure and compliant transactions across its global network of 500,000 retail service points and its growing Internet and mobile channels.

Western Union manages roughly 100 terabytes of data generated by 700 million transactions per year (about 29 per second worldwide). About 60% of that data is unstructured, including web and mobile clickstreams and log-file data from Splunk that the company started collecting in a Hadoop cluster last year. The other 40% is structured transactional data that Western Union has long analyzed in a conventional data warehousing environment.
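As a back-of-envelope illustration of the volumes quoted above, the short sketch below converts the annual transaction count into an average per-second rate and splits the 100 terabytes by the stated 60/40 shares. It assumes a 365-day year and perfectly uniform traffic, and the function names are hypothetical. Under those assumptions the average works out to roughly 22 transactions per second, so the "about 29" figure is not reproduced by a simple average and may rest on a basis the article does not state.

```python
# Rough arithmetic on the figures quoted in the article.
# Assumptions (not from the source): a 365-day year and uniform traffic.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def average_rate_per_second(transactions_per_year):
    """Average transactions per second, assuming evenly spread traffic."""
    return transactions_per_year / SECONDS_PER_YEAR


def split_terabytes(total_tb, unstructured_share):
    """Return (unstructured_tb, structured_tb) for a given unstructured share."""
    unstructured_tb = total_tb * unstructured_share
    return unstructured_tb, total_tb - unstructured_tb


rate = average_rate_per_second(700_000_000)
unstructured_tb, structured_tb = split_terabytes(100, 0.60)

print(f"Average rate: {rate:.1f} transactions per second")
print(f"Unstructured: {unstructured_tb:.0f} TB, structured: {structured_tb:.0f} TB")
```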

By correlating the newer, variably structured data sources with structured transaction data, Western Union can tell the difference between transactions that are likely to be safe and secure and those likely to be fraudulent.

"We need to be able to mine the data as close to real-time as possible, so now we're tweaking the models daily," Western Union CTO Sanjay Saraf said in a phone interview with InformationWeek.

Western Union used to write code to move data into its Hadoop cluster, but that could take days. In recent months, the company has standardized on Informatica Data Replication (IDR) as a tool to push data from Splunk and other sources down into Hadoop.

"It used to take four or five days before we'd get log-file data into Hadoop, but with IDR, we can extract and get it into HDFS and specific Hive tables in less than an hour," Saraf said.

Like many other Hadoop users, Western Union has reduced ETL costs by moving certain data transformation tasks into Hadoop, but Saraf views that trend as more of a threat to the "Oracles and [IBM] Netezzas" of the world than to Informatica. "Informatica is participating in the Hadoop ecosystem," he said.
