Asking Nicely Might Solve Generative AI’s Copyright Problem

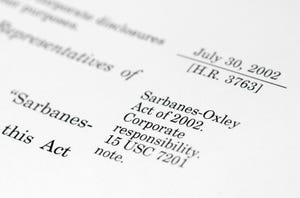

Licensing data for AI development not only provides the obvious benefit of avoiding protracted (and public) battles on the world stage but also brings several convenient side benefits.
